Synopsis

I believe estimation, and the way it’s regularly misused, is at the root of the majority of software project failures. In this article I will try to explain why we’re so bad at it and also why, once you’ve fallen into the trap of trying to do anything but near future estimation, no amount of TDD, Continuous Delivery or *insert your latest favourite practice here* will help. The simple truth is we’ll never be very good at estimation, mostly for reasons outside of our control. However, many prominent people in the Agile community still talk about estimation as if it’s a problem we can solve, and whilst some of us are fortunate to work in organisations that appreciate the unpredictable nature of software development, most are not.

Some Background

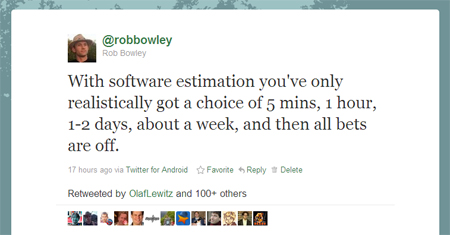

I recently tweeted something which clearly resonated with a lot of people:

It was in response to this article Mike Cohn posted proposing a technique for providing estimates for a full backlog of 300(!) stories when you haven’t yet got any historical data on the team’s performance. The article does provide caveats and says it isn’t ideal, but I think it’s extremely harmful to even suggest that this is something you could do.

I’ve long had a fascination with estimation and particularly why we’re so bad at it. It started when I was involved in a software project which, whilst in many respects a success, was also a miserable failure, resulting in a nasty witch hunt and blame being laid at the feet of those who provided the estimates.

I’ve previously written about the estimation fallacy and that formative experience, and ran my “Dealing with the Estimation Fallacy” session at two or three conferences, including SPA 2009. There are also plenty more estimation-related articles in the archive.

Why we’ll always be crap at estimating

“It always takes longer than you expect, even when you take into account Hofstadter’s Law.”

– Douglas Hofstadter, Gödel, Escher, Bach: An Eternal Golden Braid

Since my rocky experiences with estimation and my subsequent research into the area I’ve gone to great lengths to try to improve the way estimation is done. At my current organisation we collect data from all our teams on how long work items take (cycle time) and have this going back over two years. It’s a veritable mountain of data from which we’re able to ascertain the average and standard deviation for cycle times. With that, we should be able to make reliable projections.

Right?

Wrong.

We were finding that unless we were talking about stuff coming up in the next few weeks – by which point we’d generally done more detailed analysis – we were either at the far end of the estimation range or missing the predicted milestone dates altogether. It was almost always taking longer than we expected. You could argue that it was poor productivity on the part of the developers, but the projections were based on their previous performance so it’s a difficult one to argue, especially as our data was also suggesting that the cycle times for work items had actually been going down!
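To make the failure mode concrete, here is a minimal sketch of the kind of projection described above. It is an illustration only, not the calculation we actually used: the function name `project_duration`, the made-up cycle times, and the assumptions that items are worked one at a time and are statistically independent are all mine.

```python
import math
import statistics


def project_duration(cycle_times_days, remaining_items, z=2.0):
    """Naive projection of total days to finish `remaining_items`.

    Assumes (hypothetically) that items are done one at a time and that
    their cycle times are independent. Returns (low, expected, high).
    """
    mean = statistics.mean(cycle_times_days)
    stdev = statistics.stdev(cycle_times_days)  # sample standard deviation
    expected = remaining_items * mean
    # For independent items the variances add, so the spread of the
    # total grows with the square root of the number of items.
    spread = z * stdev * math.sqrt(remaining_items)
    return max(0.0, expected - spread), expected, expected + spread


# Made-up historical cycle times in days, for illustration only.
history = [2, 3, 5, 4, 8, 3, 6, 2, 7, 4]

# Projection for the 20 work items we currently know about.
low, expected, high = project_duration(history, remaining_items=20)
print(f"20 known items: {low:.1f} to {high:.1f} days, expected {expected:.1f}")

# The same projection if 5 further items turn up during detailed analysis.
_, expected_with_extra, _ = project_duration(history, remaining_items=25)
print(f"25 items, expected {expected_with_extra:.1f} days")
```

The range only describes the work items you already know about. In this toy data, discovering five more items pushes the expected duration past the top of the original range, even though nobody’s cycle times got any worse.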

There are two main reasons things were taking longer than expected:

1. Cognitive Bias

From Wikipedia: “Cognitive bias is a general term that is used to describe many observer effects in the human mind, some of which can lead to perceptual distortion, inaccurate judgment, or illogical interpretation”.

In terms of estimation, cognitive bias manifests itself in the form of Optimism Bias: “the demonstrated systematic tendency for people to be overly optimistic about the outcome of planned actions”.

There is also Planning Fallacy: “a tendency for people and organizations to underestimate how long they will need to complete a task, even when they have past experience of similar tasks over-running”.

It’s a huge problem, especially when we’re trying to do long range high level estimation. Our brains are hard-wired, not only to be optimistic about our estimates, but also to tend towards only thinking of the best case scenarios. There is very little we can do about this apart from being aware it happens.

It’s only when you get round to doing the proper analysis of the work that you start seeing other things you need to do. I remember Dan North once saying in a presentation that estimation is like “measuring the coast of Britain”: the closer you look, the more edges you’ll see and the longer it gets.

2. “Known unknowns and unknown unknowns”

Donald Rumsfeld got soundly mocked for his infamous “unknown unknowns” quote, but he was right. With software development, just like any Complex Adaptive System, there are many things you simply cannot plan for. We can at best be aware there will be pieces of work we’ve not accounted for in our estimations (known unknowns), but we’ve got no way of knowing how much work they’ll represent. The best we can do is know they’re there. The unknown unknowns? Well they’re even harder to predict 🙂

Why estimation can be so harmful

Estimates are rarely ever just estimates

There is always a huge amount of pressure to know when things will be done, and with good reason. People want to be able to do their jobs well and it’s hard if they can’t plan for when things they’re dependent upon will be ready. This is why an estimate is rarely ever “just” an estimate. People use this information, otherwise they wouldn’t be so keen to know in the first place. You can tell them all you like that the estimates can’t be relied upon, but they will be. As Dan North recently tweeted, “people asking for control or visibility really want certainty. Which doesn’t exist”.

Some of us are fortunate to breathe the rarefied air of an enlightened organisation where the unpredictable nature of software development is generally accepted, but let’s face it, most are not.

Re-framing the definition of success (or highlighting the failure of estimation)

The most significant impact of providing estimates is that as soon as they’re in the public domain they have a habit of changing the focus of the project, especially when dates start slipping. Invariably a project of any reasonable duration, which started with the honourable objective of solving a business problem, quickly changes focus to when it will be completed.

Well, there’s actually a bigger problem here already. For most organisations the definition of success remains “on time and on budget”, not whether the project has made money, or improved productivity, or brought in new customers. It is often said the vast majority of software projects are considered failures and the Standish Chaos Report is usually cited here. The problem is even they define success as meeting cost and time expectations. All this report is highlighting is the continued failure of estimation.

At best providing long range estimates just supports the “on time, on budget” mentality. At worst it takes projects started with the best intentions and drags them back down to this pit of almost inevitable failure and you – as the provider of the estimate – along with it.

The consequences…

All the typical issues dogging software development raise their heads when estimation lets us down. It’s the primary cause of death marches. Corners get cut, internal quality is compromised (for the supposed sake of speed), people start focusing more on how the team works, pressure mounts to “work faster”, morale drops and so on – all resulting in a detrimental impact on the original objective (unless it was to be on time and on budget of course, but as we know even then…).

It’s a vicious cycle repeated in organisations all over the world, over and over again. It’s my strong belief that the main reason it keeps occurring is that we can’t escape our obsession with estimation.

“But we can’t just not provide estimates”

This is the typical response when I say we should start being brave and just saying no to requests to provide estimates on anything more than 1 or 2 months’ work (at most). My answer to that is: if there’s as much chance of you coming up with something meaningful by rolling some dice or rubbing the estimate goat, then what purpose are you serving by doing so?

What’s the least worst situation to be in? Having an uncomfortable situation with your boss now or when – based on your estimates – the project’s half a million over budget and 6 months late?

Conclusion

Some people still seem to want to cling on to the idea that estimation is a valuable activity and particularly that we are able to provide meaningful long range estimates, but as I’ve explained here it’s a fool’s errand. My advice to anyone who’s put in the situation of providing long range estimates is to be brave and simply say it’s not something we can do, and then do your best to explain why. It’s against the spirit of Agile software development, but that’s not even the point; mostly it’s just in no one’s best interests – well, unless you’re a software agency that relies on expensive change requests to keep the purse full, but that’s a whole different story.

Epilogue – what do we do instead?

It’s obviously no good to just say “no” and walk away and I’m certainly not advocating that, but it’s also not the point of this article to try and explain what the alternatives are. There are a plethora of options out there, especially in Agile & Lean related literature and from well known Agile and Lean proponents, but crikey, if there’s one thing that everyone within our passionate communities can agree on, it’s that the best software comes from iterative, feedback driven development, something which is completely at odds with the way estimation is currently being used by most organisations.

Hi Rob,

Nice post. I’m not going to rekindle the same debate that has already gone on above, but I’d like to extend the discussion to the more micro estimates that go into building a burn down chart. I had an exchange with Mike Cohn recently (http://www.mountaingoatsoftware.com/blog/sprint-backlog-sums-all-work-remaining) in response to my post where I suggested we should “burn the burn down” (see http://scrumandkanban.co.uk/burning-the-burndown-chart/).

I’d be interested to hear your thoughts.
