The recent DORA 2025 State of AI-Assisted Software Development report suggests that, today, only a small minority of the industry are likely to benefit from AI-assisted coding – and more importantly, avoid doing themselves harm.

The report groups teams into seven clusters to show how AI-assisted coding is shaping delivery. Only two – 6 (“Pragmatic performers”) and 7 (“Harmonious high-achievers”) – are currently benefitting.

They’re increasing throughput without harming stability – without an increase in change failure rate (CFR) i.e. they’re not seeing significantly more production bugs, which would otherwise hurt customers and create additional (re)work.

For the other clusters, AI mostly amplifies existing problems. Cluster 5 (Stable and methodical) will only benefit if they change how they work. Clusters 1–4 (the majority of the industry) are likely to see more harm than good – any gains in delivery speed are largely cancelled out by a rise in the change failure rate (CFR), as the report explains.

The report shows 40% of survey respondents fall into clusters 6 and 7. Big caveat though: DORA’s data comes from teams already familiar with DORA and modern practices (even if not applying them fully). Across the wider industry, the real proportion is likely *half that or less*.

That means around three-quarters of the industry are not yet in a position to realistically benefit from AI-assisted coding.

For leaders, it’s less about whether to adopt AI-assisted coding, and more about whether your ways of working are good enough to turn it into an asset, rather than a liability.

Does the “lethal trifecta” kill the idea of fully autonomous AI Agents anytime soon?

I don’t think people fully appreciate yet how agentic AI use cases are restricted by what Simon Willison coined the “lethal trifecta”. His article is a bit technical so I’ll try and break it down in more layman’s terms.

An AI agent becomes very high risk when these three things come together:

- Private data access – the agent can see sensitive information, like customer records, invoices, HR files or source code.

- Untrusted inputs – it also reads things you don’t control, like emails from customers, supplier documents, 3rd party/open source code or content on the web.

- The ability to communicate externally – it has a channel to send data out, like email, APIs or other external systems.

Each of those has risks on its own, but when you put all three together it creates a structural vulnerability we don’t yet know how to contain. That’s what makes the trifecta “lethal”. If someone wants to steal your data, you have no effective way to stop them. Malicious instructions hidden in untrusted inputs can trick the agent into exfiltrating (sending out) whatever private data it can see.

If you broaden that last point from “communicate externally” to “take external actions” (like sending payments, updating records in systems, or deploying code) the risk extends even further – not just leaking data, but also doing harmful things like hijacking payments, corrupting information, or changing how systems behave.

It’s all a bit like leaving your car running with the keys in the ignition and hoping no one crashes it.

Where this matters most is in the types of “replace a worker” examples people get excited about. Think of:

- an AI finance assistant that reads invoices, checks supplier sites, and then pays them

- a customer support agent that reads emails, looks up answers on an online system and then issues refunds

- a DevOps helper that scans logs, searches the web for known vulnerabilities or issues, and then pushes config changes

All of those tick all three boxes – private data, untrusted input, and external actions – and that makes them unsafe right now.

There are safer uses, but they all involve breaking the loop – for example:

- our finance bot only drafts payments for human approval

- our support agent can suggest, but doesn’t issue refunds

- our DevOps helper only runs in a sandbox (a highly isolated environment)

Unless I have got this wrong, until we know how to contain the trifecta, the glossy vision of fully autonomous agents doesn’t look like something we can safely build.

And it may be that we never can. The problem isn’t LLM immaturity or missing features – it’s structural. LLMs can’t reliably tell malicious instructions from benign ones. To them, instructions are just text – there’s no mechanism to separate a genuine request from an attack hidden in the context. And because attackers can always invent new phrasings, the exploit surface is endless.

And if so, I wonder how long it will take before the penny drops on this.

Edit: I originally described the third element of Simon’s trifecta as “external actions”. I’ve updated this to align with Simon Willison’s original article, and instead expanded on the external actions point (partly after checking with Simon).

Maybe it wasn’t the tech after all

Tech gets the headlines, org change gets the results

What if most of the benefit from successful technology adoption doesn’t come from the technology at all? What if it comes from the organisational and process changes that ride along with it? The technology acted as a catalyst – a reason to look hard at how work gets done, to invest in skills, and to rethink decision-making.

In fact, those changes could have been made without the technology – the shiny new tech just happened to provide the justification. Which raises the uncomfortable question: if we know what works, why aren’t we doing it anyway?

This is a bit of a thought piece, a provocation. It would be absurd to say new technologies have no benefit whatsoever (especially as a technologist). However it’s worth bearing in mind, that despite decades of massive investment, and wave after wave of new technologies since the 1950s (each time touted as putting us on the cusp of “the next industrial revolution”), I.T. has delivered only modest direct productivity gains (hence the Solow Productivity Paradox).

What the evidence does show, overwhelmingly, is that technology adoption only succeeds when it comes hand-in-hand with organisational change.

So perhaps it wasn’t really the technology all along – perhaps it was the organisational change?

The diet plan fallacy

People often swear by a specific diet – keto, intermittent fasting, Weight Watchers. But decades of research in nutrition shows that the core mechanism is always the same: consistent calorie balance, exercise, and sustainable habits. 1 The diet brand is the hook. The results come from the same behaviour changes.

Successful digital transformations, cloud adoptions, ERP rollouts, or CRM programmes, the tech often get the credit when performance improves. But beneath the surface, perhaps the real gains come from strengthening organisational foundations – many of which could have been done anyway. Processes are simplified, decision-making shifts closer to the front line, teams gain clearer responsibilities, and skills are developed.

It just happens that the diet book – or the new platform – created the focus to do it.

What the research tells us

Technology only works when it comes with organisational change

Economists have long argued that IT delivers value only when combined with complementary organisational change. Bresnahan, Brynjolfsson and Hitt found that firms saw productivity gains when IT adoption came alongside workplace reorganisation and new product lines – but not from IT on its own.2

Brynjolfsson, Hitt and Yang went further, showing that the organisational complements to technology – such as new processes, incentives and skills – often cost more than the technology itself. Though largely intangible, these investments are essential to capturing productivity gains, and help explain why the benefits of IT often take years to appear. 3

Tech without organisational change delivers little benefit

Healthcare provides a good example. Hospitals that simply digitise patient records, without redesigning their workflows, see little improvement. But when technology is paired with process simplification and Lean methods, the results are very different – smoother patient flow, higher quality of care, and greater staff satisfaction. Once again, it’s the organisational change that unlocks the value, not the tool alone. 4

Anecdotally, I have seen it time and time again in my career. Most digital transformation attempts fail. Countless ERP rollouts, that not only overran, but ended up with orgs in a worse situation that they started – sub optimal processes now calcified in systems that are expensive and hard to change.

Better management works without new tech

Bloom et al introduced modern management practices into Indian textile firms – standard procedures, quality control, performance tracking. Within a year, productivity rose 11–17 percent. Crucially, these gains came not from new machinery or IT investment, but from better ways of working. In fact, computer use increased as a consequence of adopting better management practices, not as the driver. 5

Large-scale surveys across thousands of firms show the same pattern: management quality is consistently linked to higher productivity, profitability and survival. The practices that matter are not about technology, but about how organisations are run – setting clear, outcome-focused targets, monitoring performance with regular feedback, driving continuous improvement, and rewarding talent on merit. These disciplines also enable more decentralised decision-making, empowering teams to solve problems closer to the work. US multinationals outperform their peers abroad not because they have better technology, but because they are better managed. 6

AI: today’s diet plan – and why top-down continues to fail

AI adoption is largely failing. Who could have imagined? Despite the enormous hype, most pilots stall and broader rollouts struggle to deliver. McKinsey’s global AI survey finds that while uptake is widespread, only a small minority of companies are seeing meaningful productivity or financial gains.7

And those that are? Unsurprisingly, they’re the ones rewiring how their companies are run.8 BCG reports the same pattern: the organisations capturing the greatest returns are the ones that focus on organisational change – empowered teams, redesigned workflows, new ways of working – not those treating AI as a technology rollout. As they put it, “To get an AI transformation right, 70% of the focus should be on people and processes.” 9 (you may well expect them to say that of course).

Yet many firms are rushing headlong into the same old mistakes, only this time at greater speed and scale. “AI-first” mandates are pushed from the top down, with staff measured on how much AI they use instead of whether it creates any real value.

Lessons from software development

The DevOps Research and Assessment (DORA) programme 10 has spent the past decade studying what makes software teams successful. Its work draws on thousands of organisations worldwide and consistently shows that the biggest performance differences come not from technology choices, but from practices and processes: culture, trust, fast feedback loops, empowered teams, and continuous improvement 11.

Cloud computing was the catalyst for the DevOps movement, but you could have been doing all of these things anyway without any whizzy cloud infrastructure.

Now GenAI code generation is exposing similar. All the evidence to date is suggesting productivity and quality gains only appear when teams already have strong engineering foundations: highly automated build, test and deployment and frequently releasing small incremental changes. Without these guardrails, AI just produces poor-quality code at higher speed 12 and exacerbates downstream bottlenecks 13.

And none of this is really new. Agile and Extreme Programming largely canonised all the practices advocated for by the DevOps movement more than 20 years ago – themselves rooted in the management thinking of Toyota and W. Edwards Deming in post-war Japan. They’ve always been valuable – with or without cloud, with or without AI.

Maybe (hopefully) GenAI will be the catalyst for broader adoption of these practices, but it begs the question: if teams have to adopt them in order to get value from AI, where is most of the benefit really coming from – the AI tools themselves, or the overdue adoption of ways of working that have proven to work for decades? Just as with diet plans, the results come from habits that were always known to work.

Tech gets the credit, but does it deserve it?

The headlines almost always go to the technology. It’s more exciting – and easier to draw clicks – to talk about shiny new tools than the slow, unglamorous work of redesigning processes, clarifying roles, and building management discipline. Much of what passes for “transformation success” in the press is little more than PR puff, often sponsored by the vendors who sold the system or the consultants who implemented it (don’t even get me started on the IT awards industry).

Its the same inside organisations too. It’s far more palatable to credit the expensive system you just bought than to admit the real benefit came from the hard graft of changing how people work – the organisational equivalent of eating better and exercising consistently.

The fundamentals that always matter

Looking back across the evidence, there’s a clear pattern, The same fundamentals show up time and again:

- Clarity of purpose and outcomes – setting clear, measurable goals and objectives that link to business results, not tool rollout metrics.

- Empowered teams and decentralised decisions – letting people closest to the work solve problems and take ownership.

- Tight feedback loops – between users and builders, management and operations, experiment and learning.

- Continuous improvement culture – fixing problems iteratively as they emerge.

- Investment in people and process – developing skills, aligning incentives, and embedding routines and management systems that build lasting and adaptable capability.

None of these depend on technology. They’re just good, well proven, organisational practices.

So maybe it wasn’t the technology that delivered the transformation at all? Maybe the real gains came from the organisational changes – the better management, the clearer goals, the empowered teams – changes that could have been made without the technology in the first place? The shiny new tool just gave us the excuse.

The obvious question then is: why wait for the latest wave of tech innovation to do all this?

- Effect of Low-Fat vs Low-Carbohydrate Diet on 12-Month Weight Loss in Overweight Adults. Gardner et al 2018 ↩︎

- Information Technology, Workplace Organization and the Demand for Skilled Labor: Firm-Level Evidence ↩︎

- Intangible Assets: Computers and Organizational Capital ↩︎

- Electronic patient records: why the NHS urgently needs a strategy to reap the benefits. April 2025 ↩︎

- Does management matter? Evidence from India, Bloom et al 2012 ↩︎

- Measuring and explaining management practices across firms and countries. Bloom et al 2007 ↩︎

- McKinsey: The state of AI: How organizations are rewiring to capture value ↩︎

- McKinsey: Seizing the agentic AI advantage ↩︎

- BCG: Wheres the value in AI Adoption ↩︎

- DORA ↩︎

- DORA Capability Catalog ↩︎

- GitClear AI Copilot Code Quality Report 2025 ↩︎

- Faros AI: AI Engineering Report July 2025 ↩︎

Lessons from the Solow Productivity Paradox

In 1987, economist Robert Solow observed: “You can see the computer age everywhere but in the productivity statistics.” This became known as the Solow Productivity Paradox.

Despite huge investment in IT during the 1970s and 80s, productivity growth remained weak. The technology largely did what it promised – payroll, inventory, accounting, spreadsheets – but the gains didn’t show up clearly in the data. Economists debated why: some pointed to measurement problems, others to time lags, or the need for organisations to adapt their processes. It wasn’t until the 1990s that productivity growth really picked up, which economists put down to wider IT diffusion combined with organisational change.

We’re seeing something very similar with GenAI today:

- Massive investment

- As yet, very little evidence of any meaningful productivity impact

- Organisations trying to bolt GenAI onto existing processes rather than rethinking them

But there’s also an additional wrinkle this time – a capability misalignment gap.

In the 70s–80s, IT, whilst crude compared to what we have today, was generally capable for the jobs it was applied to. The “paradox” came from underutilisation and lack of organisational change – not because the tech itself failed at its core promises.

With GenAI, the technology itself isn’t yet reliable enough for many of the tasks people want from it – especially those needing precision, accuracy or low fault tolerance. For business-critical processes, it simply isn’t ready for prime time.

That means two things:

- If it follows the same path as earlier IT, we could be a decade or more away from seeing any meaningful productivity impact.

- More importantly, technology alone rarely moves the productivity needle. Impact comes when organisations adapt their processes and apply technology where it is genuinely fit-for-purpose.

New research shows AI-assisted coding isn’t moving delivery

Yet another study showing that AI-assisted coding isn’t resulting in any meaningful productivity benefits.

Faros AI just analysed data from 10,000+ developers across 1,255 teams. Their July 2025 report: The AI Productivity Paradox confirms what DX, DORA and others have already shown: GenAI coding assistants may make individual developers faster, but organisations are not seeing meaningful productivity gains.

Some of the findings:

- Developers using AI complete 21% more tasks and merge 98% more PRs

- But review times are up 91%, PRs are 154% bigger, and bugs per dev up 9%

- Delivery metrics (lead time, change failure rate) are flat

- At the company level: no measurable impact

Why? Because coding isn’t the bottleneck.

The report itself says it: “Downstream bottlenecks cancel out gains”. Review, testing, and deployment can’t keep pace, so the system jams.

Exactly the point I made in my recent post – only a small amount of overall time is spent writing the code, the majority is queues and inspection. Speeding up coding just piles more into those already bloated stages.

It isn’t really a paradox. It’s exactly what queuing theory and systems thinking predict: speeding up one part of the system without fixing the real constraint just makes the bottleneck worse.

“Typing is not the bottleneck” – illustrated

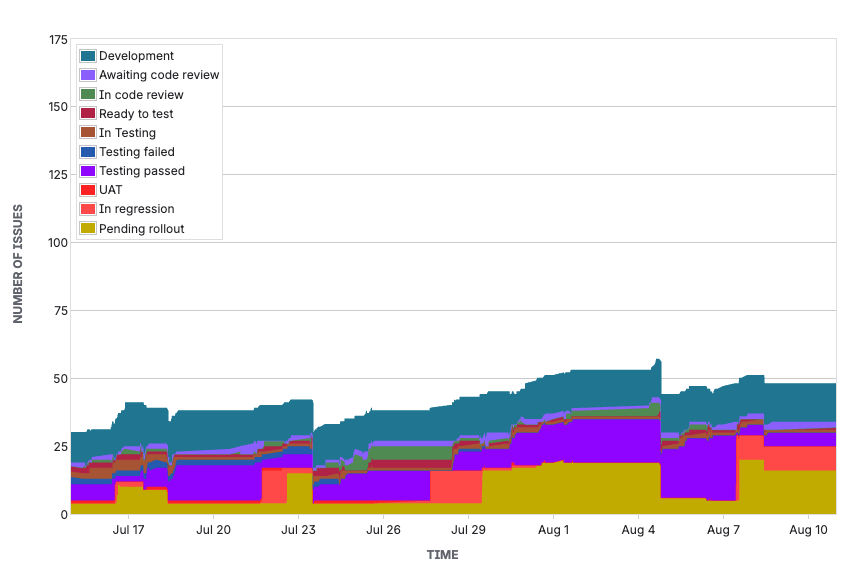

“Typing is not the bottleneck” – illustrated. If you’ve followed me for a while, you’ll know how often I say this – especially since the rise of AI-assisted coding. Here’s an example.

This is a cumulative flow diagram from Jira for a real development team. It shows work only spends around 30% of its time in the value-creation stage (Development). The other 70% is taken up by non-value-adding activities – labour and time-intensive manual inspection steps such as code reviews, feature and regression testing, and work sitting in idle queues waiting to be reviewed, tested and released.

This pattern is the norm. This example isn’t even a bad case (they at least look to be shipping to prod once or twice a week).

If you increase the amount of work in Development – whether through adding more devs, or because of GenAI coding – it increases the amount of work going into all the downstream stages as well. More waiting in idle queues, more to test, bigger and more risky releases, most likely resulting in slower overall delivery.

It’s a bit like trying to drive faster on a congested motorway.

The solution is Continuous Delivery – being able to reliably and safely ship to production in tiny chunks, daily or even multiple times a day – one feature, one bug fix at a time. Not having to batch work up because testing and deployment have such a large overhead.

If your chart looks anything like this, you’ll need to eliminate most, if not all, non-development bottlenecks – turn the ratio on its head – otherwise you’ll probably not see any producivity benefits from GenAI coding.

Is AI about to expose just how mediocre most developers are?

Most code is crap, most developers are mediocre. In the age of AI-assisted coding, that’s a problem for them and the industry.

Like many people, I’ve been of the belief that AI would not replace developers. I still don’t think it will – at least not directly. What it will do is change the economics of the profession by exposing just how much of it is built on mediocrity.

The uncomfortable truth is that most code is crap, and most developers are mediocre. AI-generated code is crap too, but it often matches – and is arguably better than – what many humans produce. When that level of work can be generated instantly, the market value of mediocrity starts to fall.

AI code is slightly less crap

I’ve been looking at codebases generated by Lovable, an AI tool that creates entire applications. I chose Lovable because its output is almost entirely AI-generated, with minimal human input, giving a clearer view of what AI produces. The output is not good, but it’s often no worse than what I’ve seen from human teams throughout my career.

In some ways, it’s better. You don’t get commented-out code left to rot, abandoned TODOs, outdated or misleading comments. Naming is often better. It can still be wrong or misleading, but not to the same extent as using generic, meaningless names like x, data, or processThing that mean nothing to the next person reading it.

Where Lovable code is no better is in the underlying design. Deeply nested conditional logic, tightly coupled code, duplication, poor separation of concerns, and framework anti-patterns – the same structural flaws that sink most human-written systems.

This isn’t surprising – AI is trained on the code that’s out there, and most of that code is crap.

Crap code is tolerated

Despite the fact most code is crap, the world keeps turning. I’ve seen many founders exit before the cracks in their technology had time to widen enough to hurt them. I’ve seen developers move on long before the consequences of their decisions landed. That time lag has allowed mediocrity to thrive.

Most organisations don’t even recognise what good engineering looks like. They treat software development as a commodity – a manufacturing production line – measured by how many features are shipped rather than whether the right outcomes are achieved. Few understand the value of investing in modern software engineering best practices and design – the things that make those outcomes sustainable.

Slow delivery, high defect rates, and spiralling maintenance costs are tolerated because they’re seen as normal – the way software has always been. The waste is staggering, but invisible to those who have never seen better. And until now, that delay in consequences has made it easier to live with.

With AI, consequences arrive sooner

AI removes that comfort zone. It speeds up the creation of code, but it also accelerates the arrival of the problems in that code. When you produce crap code faster, you hit the wall of maintainability much sooner.

For startups, more will now hit that wall before they reach an exit. In large enterprises or government departments, it could mean critical systems becoming unmaintainable years ahead of budget or replacement cycles.

For mediocre developers, AI is not a lifeline – it won’t make a poor engineer better. It’s matched the floor but not raised the ceiling. It simply lets them churn out crap code faster, so the consequences hit them and their teams sooner.

Mediocre developers are exposed

Mediocre developers – again, the majority of devs – may see themselves as experienced but are really just fast at producing code. For years, many have been able to pass as “senior” because they could churn out more code than less experienced colleagues, even if that code was crap.

With AI assistance becoming the norm, speed of output is no longer a differentiator. AI can match their pace and baseline quality (crap) so their supposed advantage disappears. And because the consequences of their bad code arrive much sooner, the cover that once let them move on or get promoted before their work collapsed under its own weight is gone. Their weaknesses are visible in real time, and their value to employers drops.

Many developers seem content to work in a factory fashion – spoon-fed Jira tickets, avoiding customers and the wider organisation, staying in their insulated bubble, and keeping their heads down just writing (crap) code. Those are the ones most at risk.

Why good engineers will only get more valuable

Good engineers apply modern best practices – automated testing, refactoring, small and frequent releases, continuous delivery – and design systems to stay adaptable under change. They pair this with a product mindset, making technical decisions in service of real user and business outcomes. It’s currently being labelled “product engineering” and talked about as the hot new thing, but it’s essentially agile software development as it was originally intended.

In the AI-assisted era, these aren’t just nice-to-have skills – they’re the only way to get meaningful benefit. Without them, AI simply helps teams create bad software faster.

Funnily enough, AI struggles just as much – if not more – with crap code as humans do. That’s not surprising when you remember LLMs are trained on human output. They’re built to mimic human reasoning patterns, so in clean, well-structured code they can do well, but in messy, inconsistent codebases they stumble – sometimes worse than a human – because they’re tripping over the same poor context we do.

The uncomfortable truth is that very few people in the industry can do this well. By my estimate, perhaps 15-20% of developers have deep, well-rounded engineering experience. Fewer than 10% have worked extensively with modern XP-style practices in a genuinely high-performing environment. Combine those skills with the ability to use AI coding tools effectively, and you might be looking at 5% of the industry currently.

A problem for the industry

Demand for genuinely good engineers will rise, but the supply is nowhere close to meeting it. AI will expose and devalue mediocre developers, yet it cannot replace the skills it reveals as missing. That leaves a gap the industry is not ready to fill.

In the short term, this could cause real delivery problems. AI in the hands of mediocre developers will accelerate the maintenance burden (“technical debt”). Some organisations may even retreat from using AI to help develop software if it becomes clear that it is making their systems harder – and more expensive – to maintain.

I do not know what the solution is. What happens next is unclear. It may take years for enough engineers to gain the depth of experience and the mindset needed to thrive in this environment.

In the meantime, the industry will have to navigate a period where the ability to tolerate mediocrity has fallen, the value of raw output has collapsed, and the expertise needed to replace it is in critically short supply.

No more comfort zone for mediocrity

One thing is certain: the comfort zone is gone. For decades, mediocrity could hide in plain sight, shielded by the slow arrival of consequences. AI removes that shield – and leaves nowhere to hide.

On Entitlement

I expect this won’t go down well, but I feel it needs to be said.

Firstly, bear with me – I want to start by talking about how I ended up in this industry.

I came out of uni heading nowhere. Meandered into a job as a pensions administrator. I was seriously considering becoming an IFA, not out of passion or ambition, just because I didn’t have any better ideas.

Then I got lucky. Someone I knew started a startup – like Facebook for villages (before Facebook existed). I picked up coding again (I’d played around as a kid). From there I blagged a job at another startup as an content editor, writing articles about online shopping. Then I blagged a job at Lycos as a “Web Master”.

Right place, right time. I was lucky. I benefitted from the DotCom boom. I fell on my feet. I still pinch myself every day.

I think about teachers and nurses – low pay, long hours, no real choice about where or how they work. I think about other well-paid knowledge professions – doctors, lawyers, architects – years of education, working brutal hours, often in demanding environments.

Most of the places I’ve worked had food and drinks on tap. Ping pong tables. Games machines. I’ve never had to wear a suit. Most places were progressive, and while the industry doesn’t have a great reputation overall, it’s been far more accommodating of people from different backgrounds, genders, and sexual orientations than many others.

After a long bull run – which peaked post-Covid with inflated salaries and over-promotion – things feel like they are changing.

Being asked to go back into the office a couple of times a week. You can’t just fall into jobs like you used to. And GenAI, of course – currently upending the way we work. A paradigm shift far greater than anything I’ve seen in 25 years of my career.

What we had wasn’t normal. It wasn’t standard. It was unusually good.

We weren’t owed any of this.

We all just got lucky.

“Attention is all you need”… until it becomes the problem

This is an attempt at a relatively non-technical explainer for anyone curious about how today’s AI models actually work – and why some of the same ideas that made them so powerful may now be holding them back.

In 2017, a paper by Vaswani et al., titled “Attention is All You Need”, introduced the Transformer model. It was a genuinely historic paper. There would be no GenAI without it. The “T” in GPT literally stands for Transformer.

Why was it so significant?

“Classical” neural network based AI works a bit like playing Snakes & Ladders – processing one step at a time, building up understanding gradually.

Transformers allow every data point (or token) to connect directly with every other. Suddenly, the board looks more like chess – everything is in view, and relationships are processed in parallel. It’s like putting a massive turbocharger on the network.

But that strength is also its weakness.

“Attention” forces every token to compare itself with every other token. As inputs get longer and the model gets larger, the computational cost doesn’t just increase. It grows quadratically. Double the input, and the work more than doubles.

And throwing more GPUs or more data at the problem doesn’t just give diminishing returns – it can lead to negative returns. This is why, for example, some of the latest “mega-models” like ChatGPT 4.5 perform worse than its predecessor 4.0 in certain cases. Meta is also delaying its new Llama 4 “Behemoth” model – reportedly due to underwhelming performance, despite huge compute investment.

Despite this, much of the current GenAI narrative still focuses on more: more compute, more data centres, more power – and I have to admit, I struggle to understand why.

Footnote: I’m not an AI expert – just someone trying to understand the significance of how we got here, and what the limits might be. Happy to be corrected or pointed to better-informed perspectives.

GenAI Coding Assistant Best Practice Guides

A constantly updated list of guides and best practices for working with GenAI coding assistants.

These articles provide practical insights into integrating AI tools into your development workflow, covering topics from effective usage strategies to managing risks and maintaining code quality.

Importantly, the authors of all these articles state they are continually updating their content as they learn more and the technology evolves.

- Defra’s AI SDLC Playbook offers a structured approach to safely incorporating AI tools throughout the development process. Still the best resource out there in my opinion.

- Atharva Raykar’s article, AI-assisted coding for teams that can’t get away with vibes explores how AI-assisted coding fits into modern software development, highlighting benefits and challenges

- Chris Parson’s article, Coding with AI: How To Do It Well And What This Means, shares hands-on tips for coding alongside AI, as well as how he uses AI more widely in his working life.

- Jeff Fosters article, A minimal workflow for AI assisted coding has some good stuff from his experiences working with Claude Code, CoPilot and working with Agents and things like MCP servers.

There are some books now available on this topic, but they tend to be out of date by the time they are published due to the fast pace of AI development.