The DX AI-assisted engineering: Q4 (2025) impact report offers one of the most substantial empirical views yet of how AI coding assistants are affecting software development. It largely corroborates the key findings of the 2025 DORA State of AI-assisted Software Development Report: quality outcomes vary dramatically depending on existing engineering practices, and both the biggest limitation and the biggest opportunity hinge on whether an organisation has adopted modern software engineering practices, which remain rare even in 2025. AI accelerates whatever culture you already have.

Who DX are and why the report matters

DX is probably the leading and most well-regarded developer intelligence platform. It sells productivity measurement tools to engineering organisations, combining telemetry from development tools with periodic developer surveys to help engineering leaders track and improve productivity.

This creates potential bias – DX’s business depends on organisations believing productivity can be measured. But it also means they have access to data most researchers don’t.

Data collection

The report examines data collected between July and October 2025. Covering 135,000 developers across 435 companies, the dataset is substantially larger than those used in most productivity research. It combines:

- System telemetry from AI coding assistants (GitHub Copilot, Cursor, Claude Code) and source control systems (GitHub, GitLab, Bitbucket).

- Self-reported surveys asking about time savings, AI-authored code percentage, maintainability perception, and enablement quality.

Update: DX isn’t particularly transparent about which data underpins each finding. The report explains how AI usage is calculated (empirical telemetry) and how time savings are calculated (self-reported surveys), but says nothing about how metrics such as Change Failure Rate (CFR), a notable one in the report, were calculated.

Key Findings

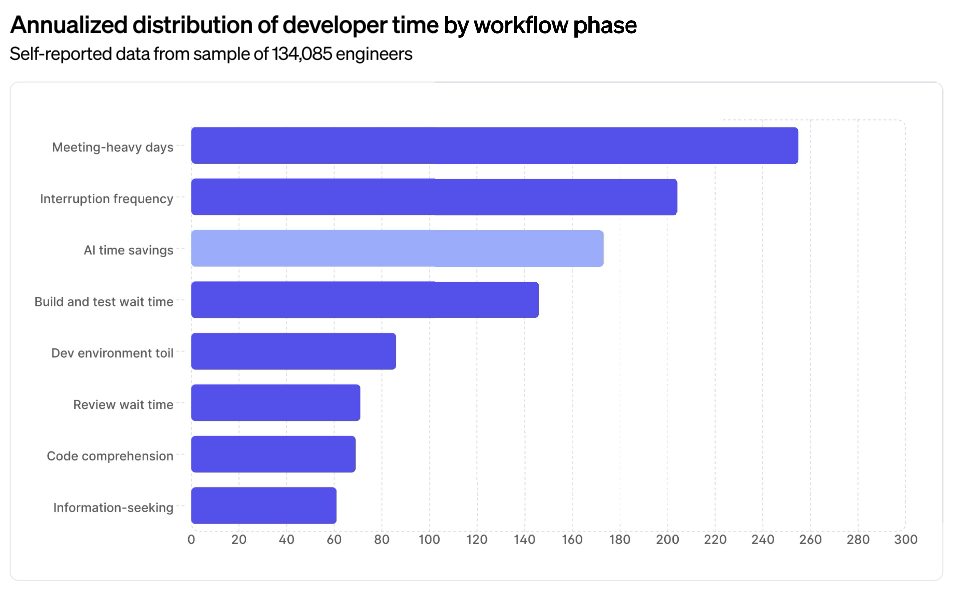

Existing bottlenecks dwarf AI time savings

This should be the headline: meetings, interruptions, review delays, and CI wait times cost developers more time than AI saves. Meeting-heavy days are reported as the single biggest obstacle to productivity, followed by interruption frequency (context switching). Individual task-level gains from AI are being swamped by organisational dysfunction. This corroborates the 2025 DORA State of AI-assisted Software Development Report’s finding that systemic constraints limit AI impact.

You can save 4 hours by writing code faster, but if you lose 6 hours to slow builds, context switching and poorly run meetings, the net effect is negative.
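The arithmetic is trivial, but it is worth making explicit because the report’s headline time-saving figure is gross, not net. A minimal sketch using the illustrative 4 and 6 hours from the sentence above (not measured values from the report); `net_weekly_hours` is a hypothetical helper:

```python
def net_weekly_hours(ai_hours_saved: float, friction_hours_lost: float) -> float:
    """Net weekly time change for a developer: positive means time gained."""
    return ai_hours_saved - friction_hours_lost


# Illustrative figures from the example above, not data from the report.
net = net_weekly_hours(ai_hours_saved=4.0, friction_hours_lost=6.0)
print(f"Net change: {net:+.1f} hours/week")  # Net change: -2.0 hours/week
```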

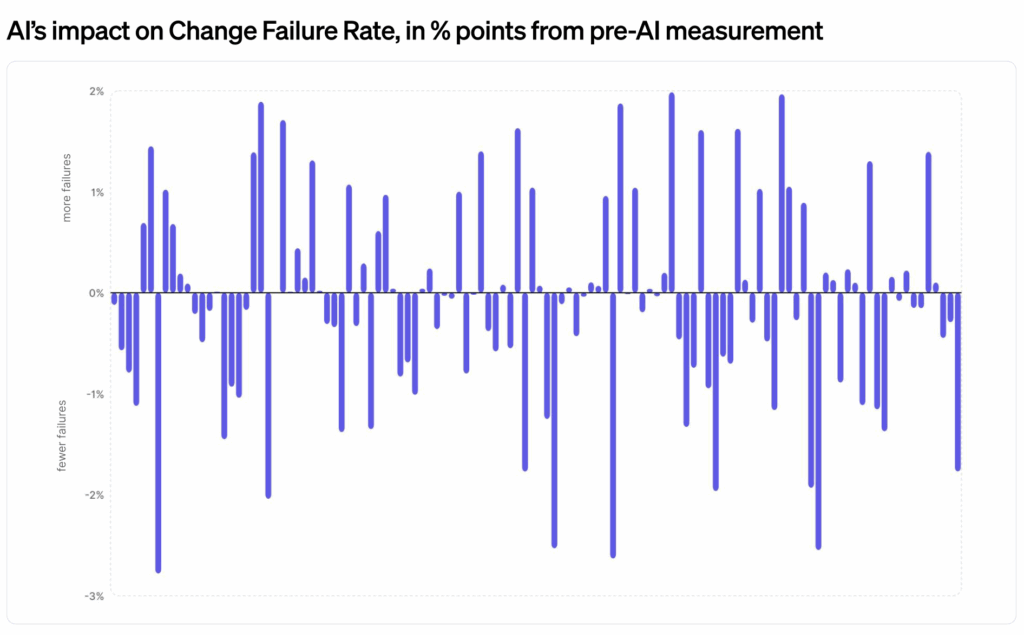

Quality impact varies dramatically

The report tracks Change Failure Rate (CFR) – the percentage of changes causing production issues. Results split sharply: some organisations see CFR improvements, others see degradation. The report calls this “varied,” but I’d argue it’s the most important signal in the entire dataset.

What differentiates organisations seeing improvement from those seeing degradation? The report doesn’t fully unpack this.
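Because the report doesn’t say how CFR was calculated, here is a minimal sketch of the conventional definition: changes that caused a production issue divided by all changes. The `Change` record and its `caused_incident` flag are hypothetical, and the sketch assumes each production issue has already been attributed to a specific change; in practice that attribution is often the hardest part.

```python
from dataclasses import dataclass


@dataclass
class Change:
    """A hypothetical deployed change; caused_incident is assumed to be known."""
    change_id: str
    caused_incident: bool


def change_failure_rate(changes: list[Change]) -> float:
    """Percentage of changes that led to a production issue."""
    if not changes:
        raise ValueError("CFR is undefined when there are no changes")
    failures = sum(1 for c in changes if c.caused_incident)
    return 100.0 * failures / len(changes)


# Hypothetical sample: one failure out of four changes.
changes = [Change("a", False), Change("b", True), Change("c", False), Change("d", False)]
print(f"CFR: {change_failure_rate(changes):.1f}%")  # CFR: 25.0%
```

Computing this per organisation before and after an AI rollout is what would expose the improvement-versus-degradation split the report describes.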

Modest time savings claimed, but they seem to have hit a wall

Developers report saving 3.6 hours per week on average, with daily users reporting 4.1 hours. But this is self-reported, not measured (see limitations).

More interesting: time savings have plateaued at around 4 hours even as adoption climbed from ~50% to 91%. The report initially presents this as a puzzle, but the data actually explains it. The biggest finding, buried on page 20, is – as above – that non-AI bottlenecks dwarf AI gains.

Throughput gains measured, but problematic

Daily AI users merge 60% more PRs per week than non-users (2.3 vs 1.4). That’s a measurable difference in activity. Whether it represents productivity is another matter entirely. (More on this in the limitations section.)
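As a quick check on the quoted figures: 2.3 versus 1.4 PRs per week works out to roughly a 64% increase, so the “60%” headline presumably reflects unrounded underlying data or conservative rounding (an assumption; the report doesn’t show the calculation). A sketch:

```python
def relative_increase(treated: float, baseline: float) -> float:
    """Percentage increase of `treated` over `baseline`."""
    return 100.0 * (treated - baseline) / baseline


daily_users_prs = 2.3  # PRs merged per week by daily AI users, as quoted
non_users_prs = 1.4    # PRs merged per week by non-users, as quoted
print(f"{relative_increase(daily_users_prs, non_users_prs):.0f}% more PRs")  # 64% more PRs
```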

Traditional enterprises show higher adoption

Non-tech companies show higher adoption rates than tech-native organisations. The report attributes this to deliberate, structured rollouts with strong governance.

There’s likely a more pragmatic explanation: traditional enterprises are aggressively rolling out AI tools in hopes of compensating for weak underlying engineering practices. The question is whether this works. If the goal is to shortcut or leapfrog organisational dysfunction without fixing the root causes, the quality degradation data suggests it won’t. AI can’t substitute for modern engineering practices; it can only accelerate whatever practices already exist.

Other findings

- Adoption is near-universal: 91% of developers now use AI coding assistants, matching DORA’s 2025 findings. The report also reveals significant “shadow AI” usage: developers using tools they pay for themselves, even when their organisation provides approved alternatives.

- Onboarding acceleration: Time to 10th PR dropped from 91 days to 49 days for daily AI users. The report cites Microsoft research showing early output patterns predict long-term performance.

- Junior devs use AI most, senior devs save most time: Junior developers have the highest adoption, but Staff+ engineers report the biggest time savings (4.4 hours/week). Staff+ engineers also have the lowest adoption rates. Why aren’t senior engineers adopting as readily? Scepticism about quality? Lack of compelling use cases for complex architectural work?

Limitations and Flaws

Pull requests as a productivity metric

The report treats “60% more PRs merged” as evidence of productivity gains. This is where I need to call out a significant problem – and interestingly, DX themselves have previously written about why this is flawed.

PRs are a poor productivity metric because:

- They measure motion, not progress. Counting PRs shows how many code changes occurred, not whether they improved product quality, reliability, or customer value.

- They’re highly workflow-dependent. Some teams merge once per feature, others many times daily. Comparing PR counts between teams or over time is meaningless unless workflows are identical.

- They’re easily gamed and inflated. Developers (or AI) can create more, smaller, or trivial PRs without increasing real output. “More PRs” often just means more noise.

- They’re actively misleading in mature Continuous Delivery environments. Teams practising trunk-based development integrate continuously with few or no PRs. Low PR counts in that model actually indicate higher productivity.
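To illustrate the workflow-dependence point, the sketch below ships the same hypothetical 600-line feature at three batch sizes. The output is identical in every case, yet the PR count varies twelvefold. `pr_count` and the line counts are purely illustrative assumptions:

```python
import math


def pr_count(total_lines_changed: int, lines_per_pr: int) -> int:
    """Number of PRs needed to ship the same change at a given batch size."""
    return math.ceil(total_lines_changed / lines_per_pr)


feature_size = 600  # hypothetical size of one feature, in changed lines
for batch in (600, 200, 50):
    # Prints 1, 3 and 12 PRs respectively for identical work.
    print(f"batch of {batch:>3} lines -> {pr_count(feature_size, batch):>2} PRs")
```

A team committing straight to trunk would register zero PRs for the same work, which is the limiting case of the final point above.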

Self-reported time savings can’t be trusted

The “3.6 hours saved per week” is self-reported, not measured, and people tend to overestimate time savings. For example, the METR study Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity found that developers estimated AI had sped them up by 20%, but they were actually 19% slower.

Quality findings under-explored

The varied CFR results are the most important finding, but they’re presented briefly and then the report moves on. What differentiates organisations seeing improvement from those seeing degradation? Code review practices? Testing infrastructure? Team maturity?

The enablement data hints at answers but doesn’t fully investigate. This is a missed opportunity to identify the practices that make AI a quality accelerator rather than a debt accelerator.

Missing DORA Metrics

The report covers Lead Time (poorly, approximated via PR throughput) and Change Failure Rate. But it doesn’t measure deployment frequency or Mean Time to Recovery.

That means we’re missing the end-to-end delivery picture. We know code is written and merged faster, but we don’t know if it’s deployed faster or if failures are resolved more quickly. Without deployment frequency and MTTR, we can’t assess full delivery-cycle productivity.
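For reference, here is a minimal sketch of how the two missing metrics are conventionally computed, assuming deployment timestamps from a delivery pipeline and incident start/restore timestamps from an incident tracker. All data and function names are hypothetical; real implementations also have to decide what counts as a deployment and when an incident is considered resolved.

```python
from datetime import datetime, timedelta


def deployment_frequency(deploy_times: list[datetime], period_days: int) -> float:
    """Average number of production deployments per day over the period."""
    return len(deploy_times) / period_days


def mean_time_to_recovery(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Mean duration from incident start to service restoration."""
    if not incidents:
        raise ValueError("MTTR is undefined when there are no incidents")
    total = sum((restored - started for started, restored in incidents), timedelta())
    return total / len(incidents)


# Hypothetical data: a deploy every other day over two weeks, and two incidents.
deploys = [datetime(2025, 7, 1) + timedelta(days=d) for d in range(0, 14, 2)]
incidents = [
    (datetime(2025, 7, 3, 9, 0), datetime(2025, 7, 3, 10, 30)),    # 90 minutes
    (datetime(2025, 7, 10, 14, 0), datetime(2025, 7, 10, 14, 30)),  # 30 minutes
]
print(f"{deployment_frequency(deploys, 14):.2f} deploys/day")  # 0.50 deploys/day
print(f"MTTR: {mean_time_to_recovery(incidents)}")  # MTTR: 1:00:00
```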

Conclusion

This is one of the better empirical datasets on AI’s impact, corroborating DORA 2025’s key findings. But the real story isn’t in the headline numbers about time saved or PRs merged. It’s in two findings:

Non-AI bottlenecks still dominate

Meetings, interruptions, review delays, and slow CI pipelines cost more than AI saves. Individual productivity tools can’t fix organisational dysfunction.

As with DORA’s findings, the biggest limitation and the biggest opportunity both come from adopting modern engineering practices: small batch sizes, trunk-based development, automated testing and fast feedback loops. AI makes their presence more valuable and their absence more costly.

AI is an accelerant, not a fix

It reveals and amplifies existing engineering culture. Strong quality practices get faster. Weak practices accumulate debt faster. The variation in CFR outcomes isn’t noise – it’s the signal. The organisations seeing genuine gains are those already practising modern software engineering. Those practices remain rare.

My advice for engineering leaders:

- Tackle system-level friction first. Four hours saved writing code doesn’t matter if you lose six to meetings, context switching and poor CI infrastructure and tooling.

- Adopt modern engineering practices. The gains from adopting a continuous delivery approach dwarf what AI alone can deliver.

- Don’t expect AI to fix broken processes. If review is shallow, testing is weak, or deployment is slow, AI amplifies those problems.

- Invest in structured enablement. The correlation between training quality and outcomes is strong.

- Track throughput properly alongside quality. More PRs merged isn’t a win if it doesn’t result in shipping faster, or if your CFR goes up. Measure end-to-end cycle times, CFR, MTTR, and maintainability.
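A sketch of the end-to-end cycle time mentioned in the last point, measured here from first commit to production deployment. Those start and end points are an assumption (some teams start the clock at ticket creation), and the timestamps are hypothetical. The median is used because a few long-running changes can skew a mean.

```python
from datetime import datetime
from statistics import median


def median_cycle_time_hours(changes: list[tuple[datetime, datetime]]) -> float:
    """Median hours from first commit to production deployment."""
    durations = [
        (deployed - first_commit).total_seconds() / 3600
        for first_commit, deployed in changes
    ]
    return median(durations)


# Hypothetical (first_commit_at, deployed_at) pairs.
changes = [
    (datetime(2025, 9, 1, 9), datetime(2025, 9, 1, 17)),   # 8 hours
    (datetime(2025, 9, 2, 9), datetime(2025, 9, 4, 9)),    # 48 hours
    (datetime(2025, 9, 3, 10), datetime(2025, 9, 3, 22)),  # 12 hours
]
print(f"Median cycle time: {median_cycle_time_hours(changes):.1f} h")  # Median cycle time: 12.0 h
```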