I’ve just been reading GitClear’s latest report on the impact of GenAI on code quality. It’s not good 😢. Some highlights and then some thoughts and implications for everyone below (which you won’t need to be a techie to understand) 👇

Increased code duplication 📋📋

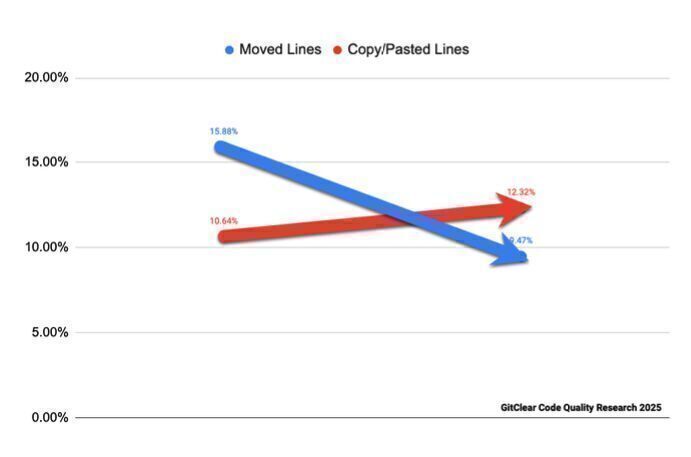

A significant rise in copy-pasted code. In 2024, within-commit copy/paste instances exceeded the number of moved lines for the first time.

Decline in refactoring 🔄

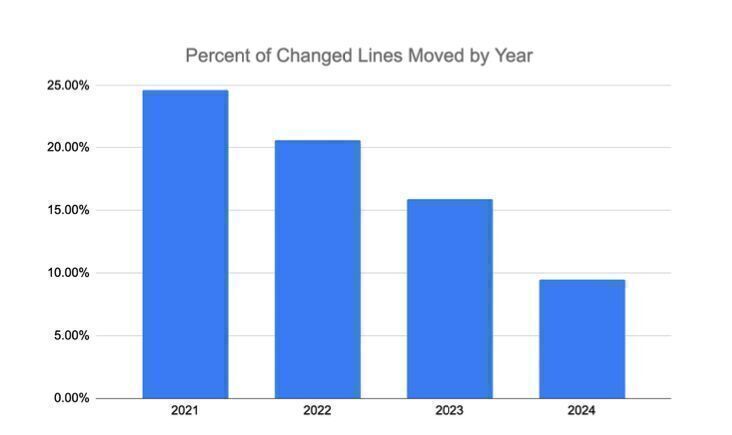

The proportion of code that was “moved” (suggesting refactoring and reuse) fell below 10% in 2024, a 44% relative drop from the previous year.

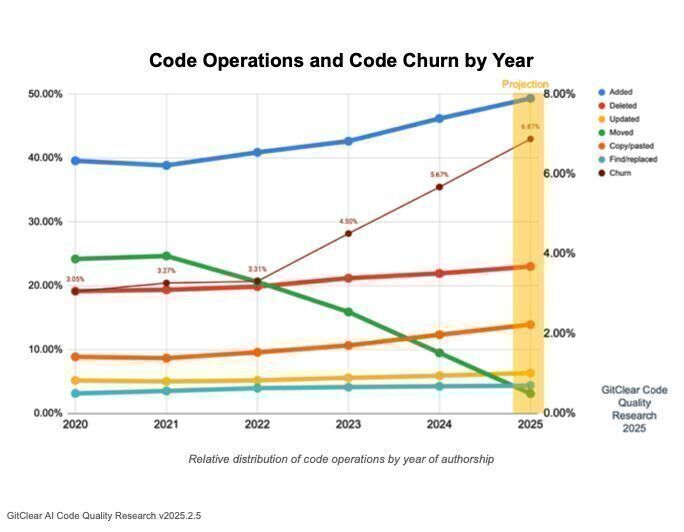

Higher rate of code churn 🔥

Developers are revising newer code more frequently: only 20% of modified lines are older than a month, compared to 30% in 2020. This suggests poor-quality code that needs more frequent fixing.

If you’re not familiar with these code quality metrics, you’ll just need to take my word for it: they’re all very bad.

Thoughts & implications

For teams and organisations

Code that becomes harder to maintain (which all these metrics indicate) means both the cost of change and the rate of defects go up 📈. As the GitClear report says, short-term gain for long-term pain 😫

But is there any short-term gain? Most good studies suggest the productivity benefits are marginal at best, and some even suggest a negative impact on productivity.

Correlation vs causation

Significant tech layoffs over the period the report covers could also be a factor in some of the decline. Either way, code quality is suffering badly (and GenAI, at the very least, isn’t helping).

For GenAI

- Models learn from existing codebases. If more low-quality code is committed to repos, future AI models will be trained on that. This could lead to a downward spiral 🌀 of increasingly poor-quality suggestions (aka “Model Collapse”).

- Developers have been among the earliest and most enthusiastic adopters of GenAI, yet we’re already seeing potential signs of quality degradation. If one of the more structured, rule-driven professions is struggling with AI-generated outputs, what does that mean for less rigid fields like law, journalism, and healthcare?
