OpenAI launched GPT-4.5 yesterday (see Sam Altman’s post on X announcing the GPT-4.5 release) – a model they’ve spent two years and a fortune training. Initial impressions? Slightly better at some things, but noticeably worse at others (see Ethan Mollick’s first impressions of GPT-4.5), and it’s eye-wateringly expensive – 30 times the cost of GPT-4o and 5 times more than their high-end o1 reasoning model (see OpenAI’s API pricing table).

This follows xAI’s recent release of Grok 3 – only marginally better than most (not all) existing high-end models, despite, again, billions spent on training.

Then there’s Anthropic’s recently released Claude 3.7 Sonnet “hybrid reasoning” model. Supposedly tuned for coding, but developers in the Cursor subreddit are saying it’s *worse* than Claude 3.5 Sonnet.

What makes all this even more significant is how much money has been thrown at these next-gen models. Anthropic, OpenAI, and xAI have collectively spent hundreds of billions of dollars over the past few years (far more than was ever spent on models like GPT-4). Despite these astronomical budgets, performance gains have been incremental and marginal – often with significant trade-offs (especially cost). Nothing like the big leaps seen between GPT-3, GPT-3.5 and GPT-4.

This slowdown was predicted by many, not least by a Bloomberg article late last year, which highlighted how all the major GenAI players were struggling with their next-gen models (whoever wrote that piece clearly had good sources).

It’s becoming clear that this is likely as good as it’s going to get. That’s why OpenAI is shifting focus – “GPT-5” isn’t a new model, it’s a product (see Sam Altman’s post on X on the OpenAI roadmap).

If we have reached the peak, what’s left is a long, slow reality check. The key question now is whether there’s a viable commercial model for GenAI at roughly today’s level of capability. GenAI remains enormously expensive to run, with all major providers operating at huge losses. The Nvidia GPUs used to train these models cost around $20k each, with thousands needed for training. It could take years – possibly decades – for hardware costs to fall enough to make the economics sustainable.

Monthly Archives: February 2025

GitClear’s latest report indicates GenAI is having a negative impact on code quality

I’ve just been reading GitClear’s latest report on the impact of GenAI on code quality. It’s not good 😢. Some highlights and then some thoughts and implications for everyone below (which you won’t need to be a techie to understand) 👇

Increased Code duplication 📋📋

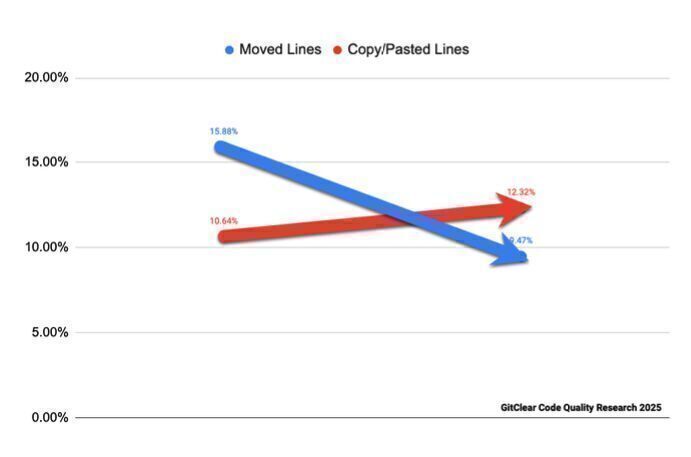

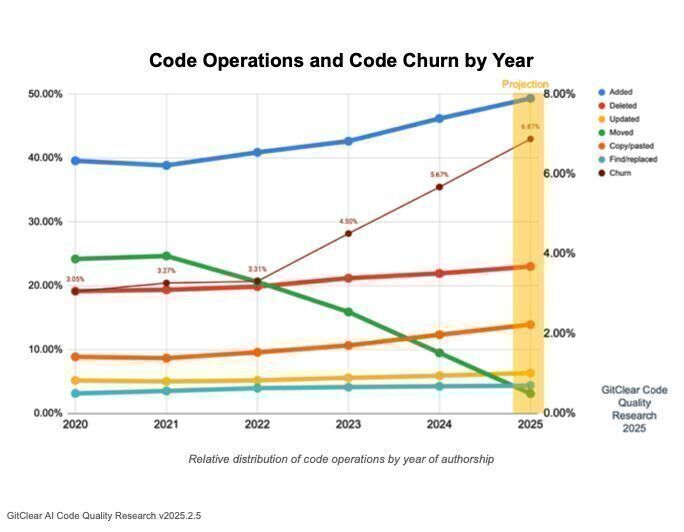

A significant rise in copy-pasted code. In 2024, within-commit copy/paste instances exceeded the number of moved lines for the first time.

Decline in refactoring 🔄

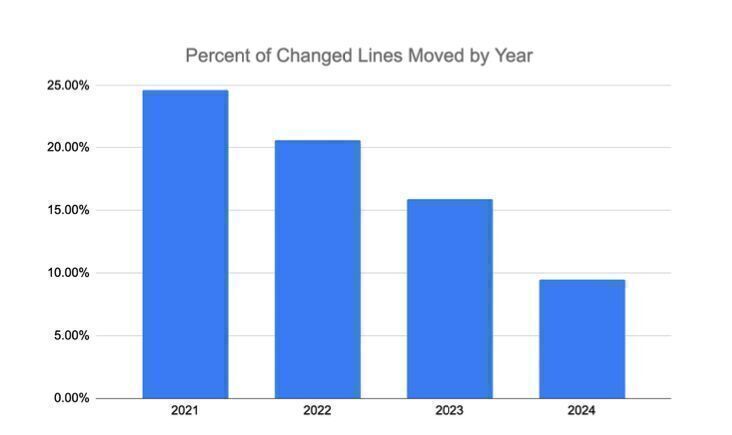

The proportion of code that was “moved” (suggesting refactoring and reuse) fell below 10% in 2024, a 44% drop from the previous year.

Higher rate of code churn 🔥

Developers are revising newer code more frequently, with only 20% of modified lines being older than a month, compared to 30% in 2020 (suggests poor quality code that needs more frequent fixing).

If you’re not familiar with these code quality metrics, you’ll just need to take my word for it: they’re all very bad.
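For anyone who does want a feel for what the churn figure above is measuring, here is a minimal sketch. It is not GitClear’s methodology: the function name, the 30-day threshold and the sample dates are all assumptions made up for illustration. It simply counts what share of modified lines were older than a month when they were changed.

```python
from datetime import date, timedelta


def share_older_than(modified_lines, threshold=timedelta(days=30)):
    """Return the fraction of modified lines that were older than `threshold`.

    `modified_lines` is a list of (written_on, modified_on) date pairs.
    This is an illustrative stand-in, not GitClear's actual calculation.
    """
    if not modified_lines:
        return 0.0
    older = sum(
        1 for written_on, modified_on in modified_lines
        if modified_on - written_on > threshold
    )
    return older / len(modified_lines)


# Made-up sample data: (date the line was written, date it was modified again)
sample = [
    (date(2024, 1, 1), date(2024, 1, 5)),    # revised after 4 days
    (date(2024, 1, 1), date(2024, 1, 20)),   # revised after 19 days
    (date(2024, 1, 1), date(2024, 3, 1)),    # revised after 60 days
    (date(2024, 2, 10), date(2024, 2, 12)),  # revised after 2 days
]

print(f"{share_older_than(sample):.0%} of modified lines were older than a month")
```

The lower that share, the more of a team’s effort is going into reworking code it only just wrote.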

Thoughts & implications

For teams and organisations

Code that becomes harder to maintain (which all these metrics indicate) results in the cost of change and the rate of defects both going up 📈. As the GitClear report says, short term gain for long term pain 😫.

But is there any short term gain? Most good studies suggest the productivity benefits are marginal at best and some even suggest a negative impact on productivity.

Correlation vs causation

Significant tech layoffs over the same period as the report could also be a factor in some of the decline. Either way, code quality is suffering badly (and GenAI, at the very least, isn’t helping).

For GenAI

- Models learn from existing codebases. If more low-quality code is committed to repos, future AI models will be trained on that. This could lead to a downward spiral 🌀 of increasingly poor-quality suggestions (aka “Model Collapse”).

- Developers have been among the earliest and most enthusiastic adopters of GenAI, yet we’re already seeing potential signs of quality degradation. If one of the more structured, rule-driven professions is struggling with AI-generated outputs, what does that mean for less rigid fields like legal, journalism, and healthcare?

Building Quality In: A practical guide for QA specialists (and everyone else)

Introduction

I wrote this guide because I wanted a useful, practical article to share with QA (Quality Assurance) specialists, testers and software development teams on how to shift away from traditional testing approaches towards defect prevention. It’s also based on what I’ve seen work well in practice.

More than that, it comes from a frustration that the QA role – and the industry’s approach to quality in general – hasn’t progressed as much as it should. Outside of a few pockets of excellence, too many organisations and teams still treat QA as an afterthought.

When QA shifts from detecting defects to preventing them, the role becomes far more impactful. Software quality improves, delivery speeds up, and costs go down.

Whilst this guide is primarily aimed at QA specialists and testers looking to move beyond testing into true quality assurance, it’s also relevant to anyone in software development who is interested in faster, more cost-effective delivery of high-quality, reliable software.

The QA role hasn’t evolved

Something similar happened to QA as happened with DevOps. At some point, testers were rebranded as QAs, but largely kept doing the same thing. From what I can see, the majority of people with QA in their title are not doing much actual quality assurance.

Too often, QA is treated as the last step in delivery – developers write code, then chuck it over the wall for testers to find the problems. This is slow, inefficient, and expensive.

Inspection doesn’t improve quality, it just measures a lack of it.

Unlike DevOps (which is a collection of practices, culture and tools, not a job title), I believe there’s still a valuable role and place for QA specialists, especially in larger orgs.

QA’s goal shouldn’t be just to find defects, but to prevent them by embedding quality throughout the development process – not just inspecting at the end. In other words, we need to build quality in.

The exponential cost of late defects and delivery bottlenecks

The cost of fixing defects rises exponentially the later they are found. NASA research (Error Cost Escalation Through the Project Life Cycle, Stecklein et al., 2004) confirms this, but you don’t really need empirical studies to substantiate it; it’s pretty simple:

The later a defect is found, the more resources have been invested. More people have worked on it, and fixing it involves more rework – it’s easy to tweak a requirement early, but rewriting code, redeploying, and retesting is much more expensive. In production, defects impact users, sometimes requiring hotfixes, rollbacks, and firefighting that disrupts everything else. Beyond direct costs, there’s the cumulative cost of delay – the knock-on effect on future work.

Late-stage testing isn’t just costly – it’s often the biggest bottleneck in delivery. Most teams have far fewer QA specialists/testers than developers, so work piles up at feature testing (right after development) and even more at regression testing. Without automation, regression cycles can take days or even weeks.

As a result, features and releases stall, developers start new work while waiting, and when bugs come back, they’re now juggling fixes alongside new development. Changes are batched into large, high-risk releases. Increasing the team size can often end up doing little more than exacerbating the bottlenecks.

It’s an inefficient and expensive way to build software.

The origins of Build Quality In

“Build Quality In” originates from lean manufacturing and the work of W. Edwards Deming (see Wikipedia: W. Edwards Deming) and Toyota’s Production System, TPS (see Wikipedia: Toyota Production System). Their core message: Inspection doesn’t improve quality – it just measures the lack of it. Instead, they focused on preventing defects at the source.

Toyota built quality in by ensuring that defects were caught and corrected as early as possible. Deming emphasised continuous improvement, process control, and removing reliance on inspection. These ideas have shaped modern software development, particularly through lean and agile practices.

Despite these well-established principles, QA and testing in many teams haven’t moved on as much as they should have.

From gatekeeper to enabler

Quality assurance shouldn’t primarily be a late-stage checkpoint; it should be embedded throughout the development lifecycle. The focus must shift left. Upstream.

This means working closely with product managers, designers, BAs and developers from the start and all the way through, influencing processes to reduce defects before they happen.

Unless you’re already working this way, it probably means working a lot more collaboratively and pro-actively than you currently are.

Be involved in requirements early

QA specialists should be part of requirements discussions from the start. If requirements are vague or ambiguous, challenge them. The earlier gaps and misunderstandings are addressed, the fewer defects will appear later.

Ensure requirements are clear, understood and testable

Requirements should be specific, well-defined, and easy to verify. QA specialists should work with the team to make sure everyone is clear, and advise on appropriate automated testing so that it’s part of the scope.

Tip: Whilst there are some strong views on the benefit of Cucumber and similar acceptance test frameworks, I’ve found the Gherkin syntax very good for specifying requirements in stories/features, which makes it easier for developers to write automated tests and easier for anyone to take part in manual testing.

If those criteria are not met, it’s not ready to start work (and it’s your job to say so). Outside of refinement sessions/discussions, I’m a fan of a quick Three Amigos (QA, Dev, Product) just before a developer picks up a new piece of work from the backlog.
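To illustrate the Gherkin tip above, here is a minimal sketch of how a requirement written as Given/When/Then can map almost line for line onto an automated test. The free-delivery rule, the threshold, the fee and the `delivery_fee` function are all hypothetical, invented purely for this example. The tests are plain functions that a runner such as pytest would pick up.

```python
# Hypothetical requirement, written Gherkin-style in the story:
#
#   Scenario: Basket at the threshold qualifies for free delivery
#     Given a basket totalling 50.00
#     When the delivery fee is calculated
#     Then delivery is free
#
#   Scenario: Basket just under the threshold pays standard delivery
#     Given a basket totalling 49.99
#     When the delivery fee is calculated
#     Then the standard delivery fee applies

FREE_DELIVERY_THRESHOLD = 50.00  # assumed business rule, for illustration only
STANDARD_DELIVERY_FEE = 3.99     # assumed fee, for illustration only


def delivery_fee(basket_total: float) -> float:
    """Return the delivery fee for a basket under the illustrative rule."""
    if basket_total >= FREE_DELIVERY_THRESHOLD:
        return 0.0
    return STANDARD_DELIVERY_FEE


def test_basket_at_threshold_gets_free_delivery():
    # Given a basket totalling 50.00
    basket_total = 50.00
    # When the delivery fee is calculated
    fee = delivery_fee(basket_total)
    # Then delivery is free
    assert fee == 0.0


def test_basket_just_under_threshold_pays_standard_delivery():
    # Given a basket totalling 49.99
    basket_total = 49.99
    # When the delivery fee is calculated
    fee = delivery_fee(basket_total)
    # Then the standard delivery fee applies
    assert fee == STANDARD_DELIVERY_FEE
```

Writing the boundary cases (exactly at the threshold, just under it) into the story is the kind of thing a QA specialist can push for before any code exists.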

Collaborating with developers

QA specialists and developers should collaborate throughout development, not just at the end. This means pairing on tricky areas and automated tests, being available to provide fast feedback (rather than always waiting for work to e.g. be moved to “ready to test”), and having open discussions about risks and edge cases. The earlier QA provides input, the fewer defects make it through.

Encourage effective test automation

QA should help developers think about testability as they write code. Ensure unit, integration, and end-to-end tests are written as part of the development process, rather than relying on manual testing later. Advise on the most suitable types of test to implement (see the test pyramid and testing trophy). If a feature isn’t easily testable, that’s a design flaw to address early.

Tip: If you have little or no automated testing, start with high-level end-to-end tests using tools like Playwright. Begin with simple, frequently executed tests from your manual regression pack and gradually build them up. Most importantly, integrate them into your CI/CD pipeline so they run automatically whenever code is deployed.

Work closely with developers to write these tests – shared ownership is essential. Whenever I’ve seen automation left solely to QA specialists (who are often less experienced in coding), it has failed.

Get everyone involved with manual testing

Manual testing shouldn’t be a bottleneck owned solely by QA. Instead of being the sole tester, be the specialist who enables the team. Teach developers and product managers how to manually test effectively, guiding them on what to look for. (Note: the clearer the requirements, the easier this becomes – good testing starts with well-defined expectations). Getting everyone involved in manual testing not only removes bottlenecks and dependencies, it also tends to mean everyone cares a lot more about quality.

Embedding Quality into the SDLC

Most teams have a documented SDLC (Software Development Lifecycle; see Wikipedia: Software Development Lifecycle). But too often, these are neglected documents – primarily there for compliance, rarely referred to and, at best, reviewed once a year as a tick-box exercise. When this happens, the SDLC fails to serve its actual intended purpose: to enable teams to deliver high-quality software efficiently.

An effective SDLC should emphasise building quality in. If it reinforces the idea that quality is solely QA’s responsibility, and that the primary way of achieving it is late-stage testing, it’s doing more harm than good.

QA specialists should work to make the SDLC useful and enabling. This means collaborating with whoever owns it to ensure it focuses on quality at every stage and supports best practices that prevent defects early. It should promote clear requirements, testability from the outset, automation, and continuous feedback loops – not just a final sign-off before release. And importantly, it should be something teams actually use, not just a compliance artefact.

Shifting from reactive to proactive

There are far more valuable things a QA specialist can be doing with their time than manually clicking around on websites. Performance testing, exploratory testing, reviewing static analysis, reviewing for common recurring support issues, accessibility. The list goes on and on. QA should be driving these conversations, ensuring quality isn’t just about finding defects, but about making the entire system stronger.

Quality is a team sport: Fostering a quality culture

The role of QA specialists should be to ensure everyone sees quality as their responsibility, not something QA owns. I strongly dislike seeing developers treat testing as someone else’s job (did you properly test the feature you worked on before handing it over, or did you rush through it just to move on to the next task?)

Creating a quality culture means fostering a shared commitment to building better software. It’s about educating teams on defect prevention, empowering them with the right tools and practices, and making it easy for everyone to care about quality and be involved.

The value of modern QA specialists

I firmly believe QA specialists still have an important role in modern software teams, especially in larger organisations. Their role isn’t disappearing – but it must evolve faster. The days of QA as manual testers, catching defects at the end of the cycle, should be left behind.

The best QA specialists aren’t testers; they’re quality enablers who shape how software is built, ensuring quality is embedded from the start rather than checked at the end.

This isn’t just better for organisations and teams – it makes the QA role a far richer, more rewarding career. On multiple occasions I’ve seen QA specialists who embody this approach go on to become Engineering Managers and Heads of Engineering, and to take on other leadership roles.

The demand for people who drive quality and improve engineering practices isn’t going away. If anything, with the rise of GenAI-generated code (a recent GitClear study shows that GenAI-generated code is having a negative impact on code quality), it’s becoming more critical than ever.

No, GenAI will not replace junior developers

With the rise of GenAI coding assistants, there’s been a lot of noise about the supposed decline of junior developer roles. Some argue that GenAI can now handle much of the grunt work juniors traditionally did, making them redundant. But this view isn’t just short-sighted – it’s wrong.

I’ve never heard a CTO say they hired junior developers to offload simple tasks to cheaper staff.

Organisations primarily hire junior devs because it’s seen as a cost-effective way to grow their own talent and thus reduce their reliance on external recruitment.

Yes, juniors start with less complex work, but if that’s all they did, they’d never develop into senior engineers – defeating the very purpose of hiring them.

But more than that, junior developers contribute far beyond just writing code, and if anything, GenAI only highlights just how valuable they really are.

Developers only spend a small amount of time coding

As I covered in this article, developers spend surprisingly little time coding. It’s a small part of the job. The real work is understanding problems, solving problems, designing solutions, collaborating with others, and making trade-offs. GenAI might be able to generate some code, but it doesn’t replace the thinking, the discussions, and the understanding that go into good software development.

Typing isn’t the bottleneck. I’ve written about this before, but to reiterate – coding is only one part of what developers do. The ability to work through problems, ask the right questions, and contribute to a team is far more valuable than raw coding speed and perhaps even deep technical knowledge (go with boring, common technology and this is less of a problem anyway).

If coding isn’t the bottleneck, and collaboration, problem-solving, and domain knowledge matter more, then the argument against juniors starts to fall apart.

What juniors bring to the table

One of the best examples I’ve seen of this was when we started our Technical Academy at 7digital. One member of our first cohort came from our content ingestion team. They’d played around with coding when they were younger, but had never worked as a developer. From day one, they added value – not because they were churning out lines of code, but because they were inquisitive, challenged assumptions, and made the team think harder about their approach. They weren’t bogged down in the ‘this is how we do things’ mindset. (It also helped that they had great industry and domain knowledge, which meant they could connect technical decisions to real business impact in ways that even some of our experienced developers struggled with).

This is exactly what people often under-appreciate about junior developers. In the right environment, curiosity and problem-solving ability are far more important than years of experience. A good junior can:

- Ask the ‘stupid’ questions that expose gaps in understanding.

- Challenge established ways of working and provoke fresh thinking.

- Improve team communication simply by needing clear explanations.

- Bring insights from other disciplines or domains.

- Provide mentoring opportunities for other developers (e.g. to gain experience as a line manager/engineering manager).

- Grow into highly effective engineers who understand both the tech and the business.

GenAI doesn’t replace the learning process

GenAI might make some tasks easier, but it doesn’t replace the learning process that happens when someone grapples with real-world software development (one challenge, however, is ensuring junior devs don’t become over-reliant on GenAI and still develop fundamental problem-solving skills).

Good juniors add more value than we often realise. They bring energy, fresh perspectives (and sometimes even domain knowledge) that make them valuable from day one. In the right environment, they’re not a cost – they’re an investment in better thinking, better collaboration, and ultimately, better software.

Rather than replacing junior developers, GenAI highlights why we need them more than ever. Fresh thinking, collaboration, and the ability to ask the right questions will always matter more than just getting code written.

And that’s precisely why juniors still matter.

A plea to junior developers using GenAI coding assistants

The early years of your career shape the kind of developer you’ll become. They’re when you build the problem-solving skills and knowledge that set apart excellent engineers from average ones. But what happens if those formative years are spent outsourcing that thinking to AI?

Generative AI (GenAI) coding assistants have rapidly become popular tools in software development, with as many as 81% of developers reporting that they use them (see Developers & AI Coding Assistant Trends by CoSignal).

Whilst I personally think the jury is still out on how beneficial they are, I’m particularly worried about junior developers using them. The risk is they use them as a crutch – solving problems for them rather than encouraging them to think critically and solve problems themselves (and let’s not forget: GenAI is often wrong, and junior devs are the least likely to spot its mistakes).

GenAI blunts critical thinking

LLMs are impressive at a surface level. They’re great for quickly getting up to speed on a new topic or generating boilerplate code. But beyond that, they still struggle with complexity.

Because they generate responses based on statistical probability – drawing from vast amounts of existing code – GenAI tools tend to provide the most common solutions. While this can be useful for routine tasks, it also means their outputs are inherently generic – average at best.

This homogenising effect doesn’t just limit creativity; it can also inhibit deeper learning. When solutions are handed to you rather than worked through, the cognitive effort that drives problem-solving and mastery is lost. Instead of encouraging critical thinking, AI coding assistants short-circuit it.

Several studies suggest that frequent GenAI tool usage negatively impacts critical thinking skills.

- A recent study by Gerlich (2025) found a strong negative correlation between GenAI tool usage and critical thinking skills, largely due to cognitive offloading – where individuals delegate thinking to external tools instead of engaging deeply in the problem-solving process (see AI Tools in Society: Impacts on Cognitive Offloading and the Future of Critical Thinking by Michael Gerlich, 2025).

- Another study by Çela, Fonkam, and Potluri (2024) found similar results, and also that for each unit increase in reliance on GenAI tools there was a corresponding decrease in problem-solving ability (see Risks of AI-Assisted Learning on Student Critical Thinking: A Case Study of Albania by Çela, Fonkam, and Potluri, 2024).

I’ve seen this happen. I’ve watched developers “panel beat” code – throwing it into a GenAI assistant over and over until it works – without actually understanding why 😢

GenAI creating more “Expert Beginners”

At an entry-level, it’s tempting to lean on GenAI to generate code without fully understanding the reasoning behind it. But this risks creating a generation of developers who can assemble code but quickly plateau.

The concept of the “expert beginner” comes from Erik Dietrich’s well known article. It describes someone who appears competent – perhaps even confident – but lacks the deeper understanding necessary to progress into true expertise.

If you rely too much on GenAI code tools, you’re at real risk of getting stuck as an expert beginner.

And here’s the danger: in an industry where average engineers are becoming less valuable, expert beginners are at the highest risk of being left behind.

The value of an average engineer is likely to go down

Software engineering has always been a high-value skill, but not all engineers bring the same level of value.

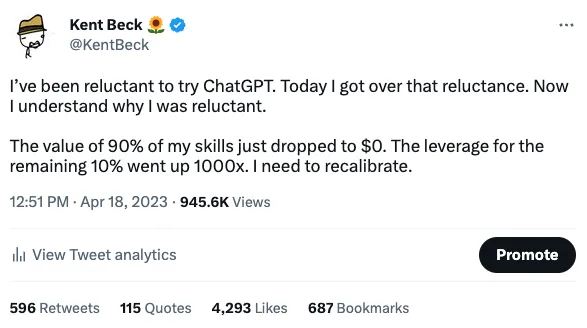

Kent Beck, one of the pioneers of agile development, recently reflected on his experience using GenAI tools:

This is a wake-up call. The industry is shifting. If your only value as a developer is quickly writing pretty generic code, the harsh reality is: if you lean too heavily on AI, you’re risking making yourself redundant.

The engineers who will thrive are the ones who bring deep understanding, strong problem-solving skills, and the ability to understand trade-offs and make pragmatic decisions.

My Plea…

Early in your career, your most valuable asset isn’t how quickly you can produce code – it’s how well you can think through problems, how well you can work with other people, how well you can learn from failure.

It’s a crucial time to build strong problem-solving and foundational skills. If GenAI assistants replace the process of struggling through challenges, learning from them (and from more experienced developers), and investing time in learning topics deeply, you risk stunting your growth and your career.

If you’re a junior developer, my plea to you is this: don’t let GenAI tools think for you. Use them sparingly, if at all. Use them in the same way most senior developers I speak to use them – for very simple tasks, autocomplete, yak shaving (as things stand today; the landscape continues to evolve rapidly). But when it comes to solving real problems, do the work yourself.

Because the developers who truly excel aren’t the ones who can generate code the fastest.

They’re the ones who problem solve the best.

The evidence suggests GenAI coding assistants offer tiny gains – real productivity lies elsewhere

GenAI coding assistants increase individual developer productivity by just 0.7% to 2.7%

How have I determined that? The best studies I’ve found on GenAI coding assistants suggest they improve coding productivity by around 5-10% (see the following studies, all published in 2024: The Impact of Generative AI on Software Developer Performance; the DORA 2024 Report; and The Effects of Generative AI on High Skilled Work: Evidence from Three Field Experiments with Software Developers).

However, also according to the best research I could find, developers spend only 1-2 hours a day on coding activity: reading, writing and reviewing code (see Today was a Good Day: The Daily Life of Software Developers, and the Global Code Time Report 2022 by Software).

In a 7.5-hour workday, that translates to an overall productivity gain of just 0.7% to 2.7%.
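The arithmetic behind that range can be sketched in a few lines. This assumes the speed-up applies only to the hours spent coding and scales linearly with them; the function and variable names are mine, chosen for illustration.

```python
WORKDAY_HOURS = 7.5


def overall_gain(coding_speedup: float, coding_hours: float,
                 workday_hours: float = WORKDAY_HOURS) -> float:
    """Overall gain = speed-up on coding x share of the day spent coding."""
    return coding_speedup * (coding_hours / workday_hours)


# Low end: 5% coding speed-up, 1 hour of coding a day
low = overall_gain(0.05, 1.0)
# High end: 10% coding speed-up, 2 hours of coding a day
high = overall_gain(0.10, 2.0)

print(f"{low:.1%} to {high:.1%}")  # prints "0.7% to 2.7%"
```

Even doubling the assumed coding speed-up to 20% at the high end would only give an overall gain of around 5%, because the share of the day spent coding is the limiting factor.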

But even these figures aren’t particularly meaningful – most coding assistant studies rely on poor proxy metrics like PRs, commits, and merge requests. The ones including more meaningful metrics, such as code quality or overall delivery, show the smallest, or even negative, gains.

And, as I regularly say, typing isn’t the bottleneck anyway. The much bigger factors in developer productivity are things like:

- being clear on priorities

- understanding requirements

- collaborating well with others

- being able to ship frequently and reliably.

GenAI might slightly speed up coding activity, but that’s not where the biggest inefficiencies lie.

If you want to improve developer productivity, focus on what will actually make the most difference.