Tech gets the headlines, org change gets the results

What if most of the benefit from successful technology adoption doesn’t come from the technology at all? What if it comes from the organisational and process changes that ride along with it? Perhaps the technology simply acted as a catalyst – a reason to look hard at how work gets done, to invest in skills, and to rethink decision-making.

In fact, those changes could have been made without the technology – the shiny new tech just happened to provide the justification. Which raises the uncomfortable question: if we know what works, why aren’t we doing it anyway?

This is a bit of a thought piece, a provocation. It would be absurd to say new technologies have no benefit whatsoever (especially coming from a technologist). However, it’s worth bearing in mind that, despite decades of massive investment and wave after wave of new technologies since the 1950s (each one touted as putting us on the cusp of “the next industrial revolution”), IT has delivered only modest direct productivity gains (the so-called Solow Productivity Paradox).

What the evidence does show, overwhelmingly, is that technology adoption only succeeds when it comes hand-in-hand with organisational change.

So perhaps it wasn’t really the technology all along – perhaps it was the organisational change?

The diet plan fallacy

People often swear by a specific diet – keto, intermittent fasting, Weight Watchers. But decades of research in nutrition show that the core mechanism is always the same: consistent calorie balance, exercise, and sustainable habits. 1 The diet brand is the hook. The results come from the same behaviour changes.

In successful digital transformations, cloud adoptions, ERP rollouts or CRM programmes, the tech often gets the credit when performance improves. But beneath the surface, perhaps the real gains come from strengthening organisational foundations, much of which could have been done anyway. Processes are simplified, decision-making shifts closer to the front line, teams gain clearer responsibilities, and skills are developed.

It just happens that the diet book – or the new platform – created the focus to do it.

What the research tells us

Technology only works when it comes with organisational change

Economists have long argued that IT delivers value only when combined with complementary organisational change. Bresnahan, Brynjolfsson and Hitt found that firms saw productivity gains when IT adoption came alongside workplace reorganisation and new product lines – but not from IT on its own.2

Brynjolfsson, Hitt and Yang went further, showing that the organisational complements to technology – such as new processes, incentives and skills – often cost more than the technology itself. Though largely intangible, these investments are essential to capturing productivity gains, and help explain why the benefits of IT often take years to appear. 3

Tech without organisational change delivers little benefit

Healthcare provides a good example. Hospitals that simply digitise patient records, without redesigning their workflows, see little improvement. But when technology is paired with process simplification and Lean methods, the results are very different – smoother patient flow, higher quality of care, and greater staff satisfaction. Once again, it’s the organisational change that unlocks the value, not the tool alone. 4

Anecdotally, I have seen this time and time again in my career: most digital transformation attempts fail. I’ve watched countless ERP rollouts that not only overran but left organisations in a worse position than when they started, with suboptimal processes now calcified in systems that are expensive and hard to change.

Better management works without new tech

Bloom et al. introduced modern management practices into Indian textile firms – standard procedures, quality control, performance tracking. Within a year, productivity rose 11–17 percent. Crucially, these gains came not from new machinery or IT investment, but from better ways of working. In fact, computer use increased as a consequence of adopting better management practices, not as the driver. 5

Large-scale surveys across thousands of firms show the same pattern: management quality is consistently linked to higher productivity, profitability and survival. The practices that matter are not about technology, but about how organisations are run – setting clear, outcome-focused targets, monitoring performance with regular feedback, driving continuous improvement, and rewarding talent on merit. These disciplines also enable more decentralised decision-making, empowering teams to solve problems closer to the work. US multinationals outperform their peers abroad not because they have better technology, but because they are better managed. 6

AI: today’s diet plan – and why top-down continues to fail

AI adoption is largely failing. Who could have imagined? Despite the enormous hype, most pilots stall and broader rollouts struggle to deliver. McKinsey’s global AI survey finds that while uptake is widespread, only a small minority of companies are seeing meaningful productivity or financial gains.7

And those that are? Unsurprisingly, they’re the ones rewiring how their companies are run.8 BCG reports the same pattern: the organisations capturing the greatest returns are the ones that focus on organisational change – empowered teams, redesigned workflows, new ways of working – not those treating AI as a technology rollout. As they put it, “To get an AI transformation right, 70% of the focus should be on people and processes.” 9 (Though, of course, you might well expect a consultancy to say that.)

Yet many firms are rushing headlong into the same old mistakes, only this time at greater speed and scale. “AI-first” mandates are pushed from the top down, with staff measured on how much AI they use instead of whether it creates any real value.

Lessons from software development

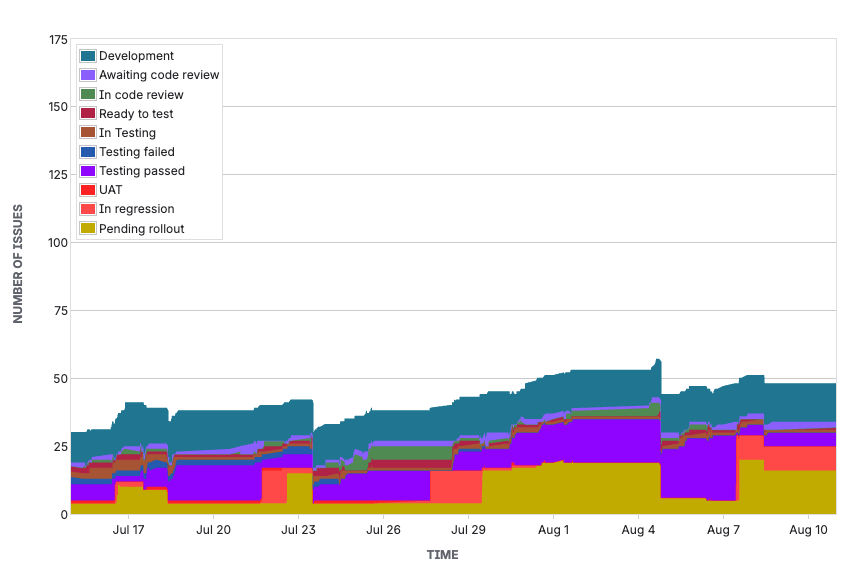

The DevOps Research and Assessment (DORA) programme 10 has spent the past decade studying what makes software teams successful. Its work draws on thousands of organisations worldwide and consistently shows that the biggest performance differences come not from technology choices, but from practices and processes: culture, trust, fast feedback loops, empowered teams, and continuous improvement 11.

Cloud computing was the catalyst for the DevOps movement, but you could have been doing all of these things anyway without any whizzy cloud infrastructure.

Now GenAI code generation is exposing a similar pattern. The evidence to date suggests that productivity and quality gains only appear when teams already have strong engineering foundations: highly automated build, test and deployment, and frequent releases of small, incremental changes. Without these guardrails, AI just produces poor-quality code at higher speed 12 and exacerbates downstream bottlenecks 13.

And none of this is really new. More than 20 years ago, Agile and Extreme Programming had already codified most of the practices the DevOps movement would later advocate – themselves rooted in the management thinking of Toyota and W. Edwards Deming in post-war Japan. They’ve always been valuable – with or without cloud, with or without AI.

Maybe (hopefully) GenAI will be the catalyst for broader adoption of these practices, but it raises the question: if teams have to adopt them in order to get value from AI, where is most of the benefit really coming from – the AI tools themselves, or the overdue adoption of ways of working that have been proven to work for decades? Just as with diet plans, the results come from habits that were always known to work.

Tech gets the credit, but does it deserve it?

The headlines almost always go to the technology. It’s more exciting – and easier to draw clicks – to talk about shiny new tools than the slow, unglamorous work of redesigning processes, clarifying roles, and building management discipline. Much of what passes for “transformation success” in the press is little more than PR puff, often sponsored by the vendors who sold the system or the consultants who implemented it (don’t even get me started on the IT awards industry).

It’s the same inside organisations too. It’s far more palatable to credit the expensive system you just bought than to admit the real benefit came from the hard graft of changing how people work – the organisational equivalent of eating better and exercising consistently.

The fundamentals that always matter

Looking back across the evidence, a clear pattern emerges. The same fundamentals show up time and again:

- Clarity of purpose and outcomes – setting clear, measurable goals and objectives that link to business results, not tool rollout metrics.

- Empowered teams and decentralised decisions – letting people closest to the work solve problems and take ownership.

- Tight feedback loops – between users and builders, management and operations, experiment and learning.

- Continuous improvement culture – fixing problems iteratively as they emerge.

- Investment in people and process – developing skills, aligning incentives, and embedding routines and management systems that build lasting and adaptable capability.

None of these depend on technology. They’re just good, well-proven organisational practices.

So maybe it wasn’t the technology that delivered the transformation at all? Maybe the real gains came from the organisational changes – the better management, the clearer goals, the empowered teams – changes that could have been made without the technology in the first place? The shiny new tool just gave us the excuse.

The obvious question then is: why wait for the latest wave of tech innovation to do all this?