Most code is crap, most developers are mediocre. In the age of AI-assisted coding, that’s a problem for them and the industry.

Like many people, I’ve long believed that AI would not replace developers. I still don’t think it will – at least not directly. What it will do is change the economics of the profession by exposing just how much of it is built on mediocrity.

The uncomfortable truth is that most code is crap, and most developers are mediocre. AI-generated code is crap too, but it often matches – and is arguably better than – what many humans produce. When that level of work can be generated instantly, the market value of mediocrity starts to fall.

AI code is slightly less crap

I’ve been looking at codebases generated by Lovable, an AI tool that creates entire applications. I chose Lovable because its output is almost entirely AI-generated, with minimal human input, giving a clearer view of what AI produces. The output is not good, but it’s often no worse than what I’ve seen from human teams throughout my career.

In some ways, it’s better. You don’t get commented-out code left to rot, abandoned TODOs, or outdated and misleading comments. Naming is often better. It can still be wrong or misleading, but not to the same extent as generic names like x, data, or processThing that tell the next reader nothing.

Where Lovable code is no better is in the underlying design. It shows deeply nested conditional logic, tightly coupled code, duplication, poor separation of concerns, and framework anti-patterns – the same structural flaws that sink most human-written systems.
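To make the first of those flaws concrete, here is a minimal sketch in Python. It is not taken from any Lovable codebase: the Order type, the discount rule, and both function names are invented for illustration. The two functions apply the same rules; only the structure differs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Order:
    # Hypothetical order record, invented purely for this illustration.
    customer_active: bool
    item_count: int
    total: float


def discount_nested(order: Optional[Order]) -> float:
    # Deeply nested version: every rule sits one level further in,
    # and the "no discount" outcome is repeated four times.
    if order is not None:
        if order.customer_active:
            if order.item_count > 0:
                if order.total >= 100:
                    return round(order.total * 0.1, 2)
                else:
                    return 0.0
            else:
                return 0.0
        else:
            return 0.0
    else:
        return 0.0


def discount_flat(order: Optional[Order]) -> float:
    # Same rules with guard clauses: rule out the "no discount" cases
    # first, so the one interesting path is left at the top level.
    if order is None or not order.customer_active:
        return 0.0
    if order.item_count <= 0 or order.total < 100:
        return 0.0
    return round(order.total * 0.1, 2)
```

Both versions return the same results. The difference only shows up when someone has to add a fifth rule, which is exactly the point at which the nested version starts to cost real money.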

This isn’t surprising – AI is trained on the code that’s out there, and most of that code is crap.

Crap code is tolerated

Even though most code is crap, the world keeps turning. I’ve seen many founders exit before the cracks in their technology had time to widen enough to hurt them. I’ve seen developers move on long before the consequences of their decisions landed. That time lag has allowed mediocrity to thrive.

Most organisations don’t even recognise what good engineering looks like. They treat software development as a commodity – a manufacturing production line – measured by how many features are shipped rather than whether the right outcomes are achieved. Few understand the value of investing in modern software engineering best practices and design – the things that make those outcomes sustainable.

Slow delivery, high defect rates, and spiralling maintenance costs are tolerated because they’re seen as normal – the way software has always been. The waste is staggering, but invisible to those who have never seen better. And until now, that delay in consequences has made it easier to live with.

With AI, consequences arrive sooner

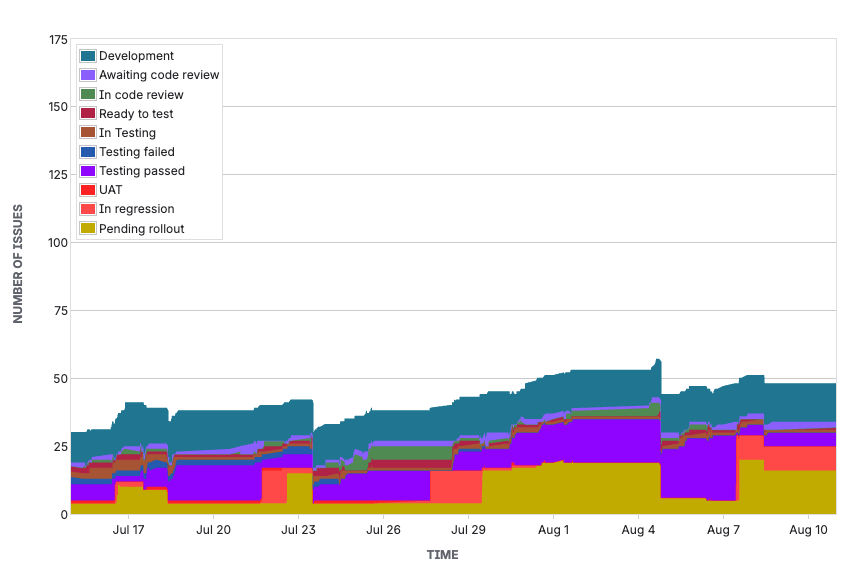

AI removes that comfort zone. It speeds up the creation of code, but it also accelerates the arrival of the problems in that code. When you produce crap code faster, you hit the wall of maintainability much sooner.

For startups, more will now hit that wall before they reach an exit. In large enterprises or government departments, it could mean critical systems becoming unmaintainable years ahead of budget or replacement cycles.

For mediocre developers, AI is not a lifeline – it won’t make a poor engineer better. It’s matched the floor but not raised the ceiling. It simply lets them churn out crap code faster, so the consequences hit them and their teams sooner.

Mediocre developers are exposed

Mediocre developers – again, the majority of devs – may see themselves as experienced but are really just fast at producing code. For years, many have been able to pass as “senior” because they could churn out more code than less experienced colleagues, even if that code was crap.

With AI assistance becoming the norm, speed of output is no longer a differentiator. AI can match their pace and their baseline quality (crap), so their supposed advantage disappears. And because the consequences of their bad code arrive much sooner, the cover that once let them move on or get promoted before their work collapsed under its own weight is gone. Their weaknesses are visible in real time, and their value to employers drops.

Many developers seem content to work in a factory fashion – spoon-fed Jira tickets, avoiding customers and the wider organisation, staying in their insulated bubble, and keeping their heads down just writing (crap) code. Those are the ones most at risk.

Why good engineers will only get more valuable

Good engineers apply modern best practices – automated testing, refactoring, small and frequent releases, continuous delivery – and design systems to stay adaptable under change. They pair this with a product mindset, making technical decisions in service of real user and business outcomes. It’s currently being labelled “product engineering” and talked about as the hot new thing, but it’s essentially agile software development as it was originally intended.
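As a small illustration of how automated testing and refactoring fit together, here is a minimal sketch in Python. The pricing rule and the shipping_cost name are hypothetical, made up for this example. The test pins the current behaviour so that the body of the function can be restructured later without anyone having to guess whether something broke.

```python
def shipping_cost(weight_kg: float, express: bool) -> float:
    """Hypothetical pricing rule: flat fee up to 1 kg, then a per-kg charge."""
    base = 5.0 if weight_kg <= 1 else 5.0 + (weight_kg - 1) * 2.0
    return base * 1.5 if express else base


def test_shipping_cost() -> None:
    # Each assertion pins one behaviour. If a later refactor changes any
    # of these results, the test fails immediately instead of in production.
    assert shipping_cost(0.5, express=False) == 5.0
    assert shipping_cost(3, express=False) == 9.0
    assert shipping_cost(3, express=True) == 13.5


if __name__ == "__main__":
    test_shipping_cost()
    print("all checks passed")
```

The same safety net is what makes small, frequent releases practical: each change is checked in seconds, whether a human or an AI tool wrote it.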

In the AI-assisted era, these aren’t just nice-to-have skills – they’re the only way to get meaningful benefit. Without them, AI simply helps teams create bad software faster.

Funnily enough, AI struggles just as much – if not more – with crap code as humans do. That’s not surprising when you remember LLMs are trained on human output. They’re built to mimic human reasoning patterns, so in clean, well-structured code they can do well, but in messy, inconsistent codebases they stumble – sometimes worse than a human – because they’re tripping over the same poor context we do.

The uncomfortable truth is that very few people in the industry can practise this kind of engineering well. By my estimate, perhaps 15-20% of developers have deep, well-rounded engineering experience. Fewer than 10% have worked extensively with modern XP-style practices in a genuinely high-performing environment. Combine those skills with the ability to use AI coding tools effectively, and you might be looking at 5% of the industry currently.

A problem for the industry

Demand for genuinely good engineers will rise, but the supply is nowhere close to meeting it. AI will expose and devalue mediocre developers, yet it cannot replace the skills it reveals as missing. That leaves a gap the industry is not ready to fill.

In the short term, this could cause real delivery problems. AI in the hands of mediocre developers will accelerate the growth of the maintenance burden (“technical debt”). Some organisations may even retreat from using AI to help develop software if it becomes clear that it is making their systems harder – and more expensive – to maintain.

I do not know what the solution is. What happens next is unclear. It may take years for enough engineers to gain the depth of experience and the mindset needed to thrive in this environment.

In the meantime, the industry will have to navigate a period where the ability to tolerate mediocrity has fallen, the value of raw output has collapsed, and the expertise needed to replace it is in critically short supply.

No more comfort zone for mediocrity

One thing is certain: the comfort zone is gone. For decades, mediocrity could hide in plain sight, shielded by the slow arrival of consequences. AI removes that shield – and leaves nowhere to hide.